Oops -- corrected...I think you meant Logic is shrinking faster than Caches no?

Can Intel recover even part of their past dominance?

- Thread starter Arthur Hanson


Xebec

Well-known member

Looking back at how Intel lost its dominance, it's almost as if someone came from the future to sabotage the company:

- 2005, Intel's Board rejects Otellini's pitch to buy Nvidia for $20B

~ 2006, right before ARM and mobility take off, Intel divests its StrongARM/XScale effort. (AMD made a similar mistake that led to Qualcomm's Adreno)

~ 2007, Otellini nopes the iPhone

~ 2011, Intel's GPU efforts may have been doomed, but they exited entirely at this point with Larrabee and no replacement, just as GPUs really took off

~ 2013, Intel hires a CEO that reinforces the most complacent behavior possible - not taking AMD or TSMC seriously

~ 2018-2019, Bob Swan hedges bets with TSMC N3; combined with the strategic shift to "5 Nodes 4 Years", Bob's (then Pat's) actions ensure that Intel internal nodes will get even more expensive over the next 5 years than they would have been otherwise

~ 2022, Intel fully drops Optane, just as AI demand starts, which could probably have used this technology as 'higher capacity / lower cost DRAM-like' memory

~ 2022, Intel fully fumbles the Arc Alchemist launch (Raja..), damaging their future ability to respond to GPU demand

(Dates are from memory and may be off)

Too early to call LBT's efforts - they look good so far, but the 14A "may not be developed" disclosure in the financials signals that Intel may be ready to give up on node development. Not a sign of confidence for future potential fab customers..

> Adding lots of latches/D-types to shorten pipelines (e.g. to "double frequency") does put clock rates up, but usually increases power per gate transition/operation because the D-types don't contribute any useful function, they just take power (both to propagate data and for clocking). Yes this increases OPS/mm2 and clock rate, but usually total power for a given function also increases -- this is what Intel found out the hard way with NetBurst... ;-)
>
> We've done exactly this comparison many times in DSP design, and the conclusion is always that it's better to have more gate depth between latches and fewer latches and clock more slowly, so long as you can afford the extra silicon area, because more parallel paths running more slowly decreases power but increases area for the same task.
>
> This may not work for bitcoin miners because they also have to worry about die size/cost and squeezing more MIPS out of each mm2, and in this case extra pipelining might help meet this requirement.

Because no other chip's power consumption accounts for 80% of total cost.

Let's check 5nm as an example. Say latches/DFFs account for 30% of power.

Doubling the latches takes the power to 1.3X.

Lowering the voltage from 0.4V to 0.3V scales power by (0.3/0.4)^2 = 0.5625.

Since the Vth is around 0.2V, the speed will be the same.

A dynamic latch is very small, so maybe 5% extra area.

1.3 * 0.5625 * 1.05 = 0.77.

You can reduce costs by 20%, which could double your profit.
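The estimate above is just a product of three scaling factors. Here is a minimal Python sketch of that arithmetic, using only the numbers from the post. The function and parameter names are made up for illustration, and the simple Vdd^2 power scaling is the post's own assumption, not a verified result.

```python
def relative_cost(latch_power_share=0.30, latch_multiplier=2.0,
                  v_old=0.4, v_new=0.3, area_overhead=0.05):
    """Relative (power x area) after adding latches and lowering Vdd."""
    # Extra latches only scale the latch share of power: 0.7 + 0.3 * 2 = 1.3
    power_from_latches = (1.0 - latch_power_share) + latch_power_share * latch_multiplier
    # Power is assumed to scale with Vdd^2 at an unchanged clock rate
    voltage_scaling = (v_new / v_old) ** 2
    # Extra latch area expressed as a fraction of the die
    area_scaling = 1.0 + area_overhead
    return power_from_latches * voltage_scaling * area_scaling

result = relative_cost()
print(f"{result:.3f}")  # prints 0.768
```

The product comes to about 0.768, which the post rounds to 0.77. Changing `latch_power_share` or `v_new` shows how sensitive the claimed saving is to those two guesses.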

> Because no other chip's power consumption accounts for 80% of total cost.

You're talking about architecture changes here, all of which are perfectly valid but are independent of the tradeoff between PDP and voltage. That suggests to me that you're well familiar with optimizing architectures (gate level design), but not with optimizing library conditions (transistor level design and choice of library operating conditions, i.e. building custom gate libraries).

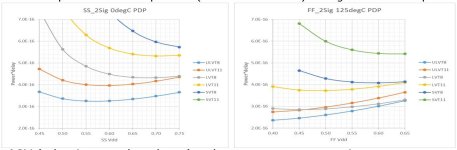

Regardless of the architecture or circuit or process node, you *always* get PDP curves like the ones I posted, and there's *always* a voltage where PDP is minimized -- this moves around with process corner and exact circuit design and clock rate (which trades off dynamic vs. leakage power), but is basically defined by device threshold voltage because below this current drops exponentially (subthreshold slope, causes leakage) and above this current rises roughly as (Vgs-Vth)^2, at least for small overdrives which we're dealing with here for low-voltage operation.

With higher Vth (e.g. LVT/SVT gate, slow process corner, low temperature) the minimum PDP is both higher and occurs at a higher voltage; with lower Vth (e.g. ULVT gate, fast process corner, high temperature) the minimum PDP is lower and occurs at a lower voltage -- here are some results for N7 again showing these two extreme cases. Even for the fastest gate type (ULVT) the minimum PDP in the slow/cold corner is at about 0.57V, in the fast/hot corner it's off the left-hand side of the plot around 0.33V, and for typical conditions (not shown) it's around 0.45V.
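A toy model gives one way to see why the minimum-PDP voltage tracks Vth. The sketch below assumes PDP means dynamic power at a fixed clock rate (proportional to Vdd^2) multiplied by gate delay (proportional to Vdd / (Vdd - Vth)^2, from the square-law current mentioned above). It ignores leakage and subthreshold conduction entirely, so it will not reproduce the N7 figures quoted here. All names and the two Vth values are illustrative assumptions.

```python
def pdp(vdd, vth):
    """Toy power-delay product in arbitrary units (square-law device, no leakage)."""
    if vdd <= vth:
        raise ValueError("the square-law model only applies above Vth")
    power = vdd ** 2                  # dynamic power at a fixed clock rate
    delay = vdd / (vdd - vth) ** 2    # C*V / I with I ~ (V - Vth)^2
    return power * delay

def min_pdp_voltage(vth, v_max=1.2, step=0.001):
    """Sweep Vdd above Vth and return (voltage, pdp) at the minimum."""
    n = int(round((v_max - vth) / step))
    candidates = [vth + step * i for i in range(1, n + 1)]
    best = min(candidates, key=lambda v: pdp(v, vth))
    return best, pdp(best, vth)

low_v, low_pdp = min_pdp_voltage(vth=0.20)    # stands in for a lower-Vth case
high_v, high_pdp = min_pdp_voltage(vth=0.25)  # stands in for a higher-Vth case
print(f"Vth=0.20: min PDP near {low_v:.2f} V")
print(f"Vth=0.25: min PDP near {high_v:.2f} V")
```

In this model the minimum lands at 3 x Vth (set the derivative of V^3 / (V - Vth)^2 to zero), so a higher Vth gives a minimum that is both higher and at a higher voltage, matching the qualitative trend described above. Real devices are not pure square-law and do leak, so the actual voltages differ.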

The majority of this shift is due to process corner not temperature, and there's nothing you can do about this unless you're willing to lose a lot of yield at either chip or even wafer level. The voltage for minimum PDP hardly changes with process node because it's pretty much linked to Vth, the curves all the way from N7 down to N2 (I've done this analysis for every node) are pretty much similar except that they all move downwards each node (lower power consumption, lower gate capacitance, higher gate speed). And I have *never* seen a case where chips from the middle of the process spread have minimum PDP at 0.32V.

In practice you need to decide which power consumption to minimise, the maximum allowed (slow process corner), typical, or somewhere in between. You can't pick the ultra-low voltage for the fast corner (e.g. 0.33V) and claim this as your "operating Vdd" because hardly any chips will run fast enough at such a low voltage. This assumes that you need a constant level of processing power, on top of this if there are cases where this is lower (e.g. a CPU) then of course you can drop the supply voltage and save power by reducing both dynamic and leakage power -- but if you're just hammering away crunching data all the time (like a bitcoin miner?) you can't do this.

And just to be even clearer, to do all this you need to build custom cell libraries which meet your operating conditions, and have circuits on-chip which measure gate delay or circuit speed, and have an individually-adjustable supply *per chip*. You can't use standard cell libraries from the vendors (e.g. TSMC, Synopsys) because these invariably have corners which are based on standard supply tolerancing and are "the wrong way round" (e.g. SpeedMin=slow process/cold/Vddmin, Power_max=fast process/hot/Vddmax).
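The per-chip supply adjustment described above can be sketched as a search for the lowest Vdd at which a given chip still meets a target gate delay. Everything here is hypothetical: `gate_delay` stands in for an on-chip speed monitor and uses a toy square-law delay model, and the Vth values standing in for fast and slow process corners are made up.

```python
def gate_delay(vdd, vth):
    """Toy stand-in for an on-chip delay monitor (arbitrary units)."""
    if vdd <= vth:
        return float("inf")           # treated as unusably slow in this model
    return vdd / (vdd - vth) ** 2

def lowest_passing_vdd(vth, target_delay, v_max=1.0, tol=1e-4):
    """Bisect for the lowest Vdd whose delay meets the target.

    Relies on delay falling monotonically as Vdd rises above Vth.
    """
    if gate_delay(v_max, vth) > target_delay:
        return None                   # chip cannot meet timing even at v_max
    lo, hi = vth, v_max               # lo fails timing, hi passes
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gate_delay(mid, vth) <= target_delay:
            hi = mid
        else:
            lo = mid
    return hi

target = 10.0                          # hypothetical delay budget
fast_vdd = lowest_passing_vdd(vth=0.18, target_delay=target)
slow_vdd = lowest_passing_vdd(vth=0.26, target_delay=target)
slow_chip_at_fast_vdd = gate_delay(fast_vdd, vth=0.26)
print(f"fast-corner chip: {fast_vdd:.3f} V, slow-corner chip: {slow_vdd:.3f} V")
```

With these made-up numbers the slow-corner chip needs a noticeably higher supply than the fast-corner one, and running it at the fast chip's voltage misses the delay budget. That is the same point made above about not being able to claim the fast-corner voltage as the operating Vdd.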

This is all just to minimise power consumption for a given function; if you want to minimise die area/cost ("double your profit") the tradeoffs are completely different, you want to run at a higher voltage to get more throughput per mm2, the result will be a smaller cheaper chip but one that dissipates more power.

> Good discussion. I'm going to run spice today.

I might be telling my granny to suck eggs, but make sure you use realistic values for gate type (not just inverters), fanout (to match real circuit, typically 3 or so), tracking load, and interconnect resistance (advanced technologies are often interconnect-dominated) -- otherwise you can get unrealistic results... ;-)

MKWVentures

Moderator

> Looking back at how Intel lost its dominance, it's almost as if someone came from the future to sabotage the company:

I think you are confusing decisions forced by failure with poor strategy. No company, including Intel, can do whatever it puts its mind to.

As I have said before, Intel was a leader in Mobile strategy, GPU strategy, AI accelerator strategy. Cost effective execution was the weakness.

Intel cannot do graphics, they are too slow and failed (Larrabee wasn't cancelled, it failed).

Optane is 5x slower than DRAM, no one wanted it, and Intel delayed it too long. It was cancelled when they realized there were no sales.

Intel could not do mobile, they are too expensive and slow. They did many leading edge parts and lost billions.

Intel decided to outsource manufacturing because internal was 2x the price and not a node ahead. 2027 will tell us if anything changed.

Pat decided to do foundry (knowing that Intel loses tons on manufacturing), but Intel was arrogant and told customers what they should do, and no one signed up but the USG (LBT fixed that).

Intel purchased multiple AI companies.... and derailed them so they disappeared.

LBT talked about cancelling 14A since no one was committing to it and it costs billions per year to keep development going. We will see if it changes.

Side note: what if during that time, Intel had decided to outsource manufacturing (like Nvidia, Apple, Broadcom, Qualcomm, AMD, IBM) and put the resources into CPUs and Accelerators?

Intel is a very smart compute company that was very slow, expensive and customer unfriendly. LBT can make them a very smart compute company that prioritizes its strengths not its weaknesses. Not to regain 1995.... but succeed in 2028.

siliconbruh999

Well-known member

> Intel cannot do graphics, they are too slow and failed (Larrabee wasn't cancelled, it failed)

I don't know where you got this, but they can do graphics, and their new IP is way better than Larrabee; they are beating AMD with their product in many cases.

> Optane is 5x slower than DRAM, no one wanted it and Intel delayed it too long. It was cancelled when they realized there were no sales.

On this one Intel shot themselves in the foot by restricting it to Intel products only. The tech is very good and would have been a money printing machine in AI, but it doesn't exist.

> Side note: what if during that time, Intel had decided to outsource manufacturing (like Nvidia, Apple, Broadcom, Qualcomm, AMD, IBM) and put the resources into CPUs and Accelerators?

Same result, to be honest. It's not just foundry; design fumbled as well. You can see it with MTL/ARL: their GPU designs are bad in perf/mm2, but the architecture is good.

> Intel purchased multiple AI companies.... and derailed them so they disappeared.

Yeah, only one acquisition was successful, Movidius. That's why I don't like Intel buying SambaNova.

> I think you misinterpret decisions required due to failure with poor strategy. No company, including Intel can do what ever it puts its mind to.
>
> As I have said before, Intel was a leader in Mobile strategy, GPU strategy, AI accelerator strategy. Cost effective execution was the weakness.

They have enough money to take a look into every technology but not enough money to invest into every technology.

> I might be telling my granny to suck eggs

I needed to look that one up. I learned that "horses were for courses" from you a few years back. Only somebody in the land of steeplechases would come up with that. Your technical tidbits are appreciated. You just came up with a new concern that I didn't worry about in the past... the resistance of the low level interconnect. I typically just slap down a horizontal M4 preroute with an M5 hit point, then autoroute and just do Cx below M4. Our tools (we compete with VXL) allow the user to fetch the RCx below M4, but not by default.

I get a feeling that you do RCx down to the bone. Is that true? What process and what frequency?

Xebec

Well-known member

> LBT talked about cancelling 14A since no one was committing to it and it costs billions per year to keep development going. We will see if it changes.

Good responses btw.

For LBT + 14A, I understand why he did it (and he may have had no choice), but it also rings a bit of 'shooting yourself in the foot' if Intel is serious about its Foundry strategy. Signalling you're about to capitulate on future fabs could become a self-fulfilling prophecy in this case, i.e. warn the customers your fab future is (possibly) dead, and your fab future will certainly become dead. Bean counters (who often rule companies) aren't going to recommend risking fabbing with Intel under these circumstances.

I agree Intel cannot succeed at everything, but they've failed at so much - I was just observing it's interesting that literally every chance they had to succeed was squandered. Intel wasn't always this way (this thread is about whether Intel can regain its former glory) -- they figured out a way to pivot really fast to microprocessors from memory in the early 1980s, and they also pivoted very fast in Foundry to stay ahead of competition through the 1990s and most of the 2000s. They *can* execute, but it seems that starting around the Craig Barrett/Otellini transition, their ability to execute successfully greatly diminished.

My thesis is just that - if someone could travel back in time and sabotage a company to prevent it from succeeding -- Intel looks like that blueprint. Whether the failure was strategic or execution is sorta moot -- Intel just flat out failed on several major market transitions in a row, and certainly hasn't proven yet they can change that.

> I think you misinterpret decisions required due to failure with poor strategy. No company, including Intel can do what ever it puts its mind to.
>
> As I have said before, Intel was a leader in Mobile strategy, GPU strategy, AI accelerator strategy. Cost effective execution was the weakness.

Part of good strategic decision making is knowing what you can and can't do. Intel failing to execute several times in a row indicates a strategic thinking failure too. Where was the strategy to be cost effective that should have happened at least 6 times by now?

> Intel cannot do graphics, they are too slow and failed (larrabee wasnt cancelled. it failed)

Really? Intel's integrated CPU graphics are arguably the world's highest volume production graphics. Datacenter and supercomputing GPUs were delivered, but Intel wasn't serious enough in time for the emergence of transformer neural networks, and Nvidia (and arguably AMD) had substantial technology leadership. I think your judgments about what Intel can and can't do, or any other company's abilities, are biased. It's just a matter of corporate leadership and hiring the right people.

> Optane is 5x slower than DRAM, no one wanted it and intel delayed it too long. It was cancelled when they realized there were no sales.

Optane is slower than DRAM, but the reasons Optane failed are not as simple as you post.

1. Optane had better endurance than NAND Flash, but not unlimited endurance like DRAM. So for any DRAM-related uses Optane needed a DRAM cache in the memory controller to reduce write traffic. The MC cache requirement made Optane impractical for use as a DRAM extender by other CPU providers, such as AMD or IBM, so it was never licensed.

2. Optane could be used to make compelling SSDs initially, since it was a non-volatile technology, and was very useful for file system logs and transaction processing and database logs, but Optane's layering technology, called "decks", was far more expensive to implement than NAND flash layers. So over time there was going to be an ever-widening gap between flash storage cost per byte and Optane's storage cost per byte, and the gap would overwhelm Optane's advantages in endurance and access speed. So, in the long run, without a manufacturing breakthrough that was apparently not on the horizon, Optane could not be competitive in SSDs.

Since Optane was uncompetitive cost-wise in storage and had implementation complexity issues for DRAM extension, it had no practical high-volume markets. I also suspect, but have not seen proof, that Optane manufacturing costs were too high to be competitive over the long run.

> Intel could not do mobile, they are too expensive and slow. They did many leading edge parts and lost billions

I'm not convinced of the slowness assertion, but Intel's fab processes were aimed at high performance CPUs with a power-be-damned objective to get the highest clock speeds. On the too expensive point, I think that's fair and accurate.

> Intel purchase multiple AI companies.... and derailed them so they disappeared.

I agree. Google has proven that dedicated AI processors are practical, but owning the entire software stack was apparently too daunting to Intel. Amazon is also proving that dedicated AI chips are practical when you control the entire stack, but Google and Amazon are not merchant chip vendors. Nvidia and AMD took a more evolutionary GPU approach, and it appears Intel did not have the investment endurance to go down that path, so they kept trying the dedicated AI design approach (Nervana and Habana). Dedicated accelerators are always more complex to bring to market than more general purpose approaches. I'm also having difficulty getting excited about SambaNova for inference. AI technology looks like it's probably changing too quickly for narrow dedicated processors from merchant vendors. In Google and Amazon's cases, because they own the basic AI research and the entire software stack, they can see the software requirements coming more than one generation away.

BruceA

Well-known member

What does dominance mean?

Would TSMC have done Strained Silicon, Gate Last, FinFET, Nanosheet, or Backside if Intel hadn't? TSMC has always been a fast follower, taking little steps and minimal innovation to keep risk low.

They wouldn't have announced A16 if Intel hadn't announced their backside before product launch.

No. Intel can continue to lead on the technology front, but on the business front, barring a crazy Xi move, TSMC will be the dominant chip supplier for the next decade.

I will look to Intel to lead on technology if they can get some customers on 18A and 14A. Hopefully they will keep their technology closer to the chest like they did in the 90s with strained silicon, Gate Last and FinFET.
