The defining characteristic of In-Circuit Emulation (ICE) has been that the emulator is connected to real circuitry: a storage device perhaps, and PCIe or Ethernet interfaces. The advantage is that you can test your emulated model against real traffic and responses, rather than against an interface model which may not fully capture the scope of real behavior. These connections are made through (hardware) speed bridges which adapt emulator performance to the connected device. And therein lies (at times) a problem. Hardware connections aren’t easy to virtualize, which can impede flexibility for multi-interface and multi-user virtual operation.

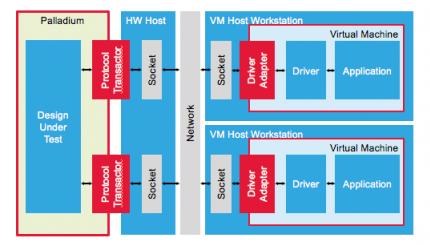

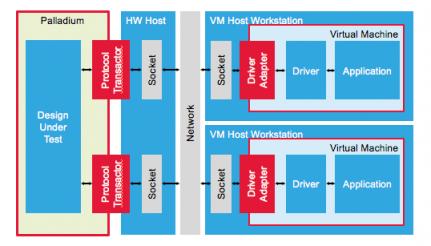

A case of particular interest, where a different approach can be useful, arises when the “circuit” can be modeled by one or more host workstations, for example where multiple GPUs modeled on the emulator communicate through multiple PCIe channels with host CPU(s). Cadence now supports this option through Virtual Bridge Adapters for PCIe. These are software adapters, allowing the OS and user applications on a host to establish a protocol connection to the hardware model running on the emulator. As is common in these cases, one or more transactors running on the emulator manage transactions between the emulator and the host.

I wrote about this concept earlier in a piece on transaction-based emulation, but of course a general principle is one thing; a fully-realized PCIe interface based on this principle is another. This style of modeling comes with multiple advantages: low-level software can be developed and debugged against pre-silicon design models, multiple users can run virtualized jobs on the emulator, and users can model multiple PCIe interfaces to their emulator model. Also, and this is a critical advantage, the adapter provides a fully static solution. Clocks can be stopped to enable debug/state dump or to insert faults without the interface timing out, something which would be much more challenging with a real hardware interface.
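To make the clock-stop point concrete, here is a toy sketch in Python. It is not Cadence's implementation and uses no real emulator API; every name in it (`CompletionTimer`, `run`, the tick and cycle counts) is hypothetical. It assumes that a free-running hardware device measures a response timeout in wall-clock time, while a software adapter can measure it in emulated clock cycles, which stop advancing when the emulator clock is paused.

```python
from dataclasses import dataclass


@dataclass
class CompletionTimer:
    """Toy timeout tracker; `limit` uses the same units as the `now` values."""
    limit: int
    started_at: int = 0

    def start(self, now: int) -> None:
        self.started_at = now

    def expired(self, now: int) -> bool:
        return now - self.started_at > self.limit


def run(pause_ticks: int, limit: int = 100, latency_cycles: int = 20):
    """Model one request during which the emulator clock is paused.

    Returns (hardware_side_timed_out, virtual_side_timed_out).
    All numbers are illustrative, not taken from any real system.
    """
    wall = 0    # wall-clock ticks, always advancing
    cycles = 0  # emulated clock cycles, frozen while paused

    hw_timer = CompletionTimer(limit)    # assumption: counts wall time
    virt_timer = CompletionTimer(limit)  # assumption: counts emulated cycles
    hw_timer.start(wall)
    virt_timer.start(cycles)

    # Clock stopped for debug: wall time moves on, emulated cycles do not.
    wall += pause_ticks

    # Clock resumes; the design needs `latency_cycles` to respond.
    wall += latency_cycles
    cycles += latency_cycles

    return hw_timer.expired(wall), virt_timer.expired(cycles)


if __name__ == "__main__":
    print(run(pause_ticks=0))     # no pause
    print(run(pause_ticks=1000))  # long debug pause
```

With no pause, neither side times out. With a long pause, only the wall-clock timer expires, which is the situation a static, software-mediated interface is meant to avoid.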

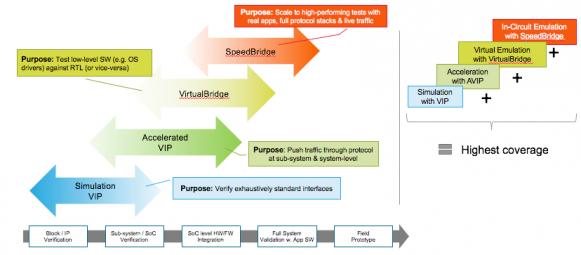

Frank Schirrmeister pointed out how this fills a verification IP hole in the development flow. In IP and subsystem development, you’ll validate protocol compliance against simulation VIPs or accelerated equivalents running on an emulator. When you want high confidence that your design behaves correctly in a real system handling real traffic, you’ll use an ICE configuration with speed bridges. In between, there’s a place for virtual emulation using virtual bridge adapters. In the early stages of system development, there’s a need to validate low-level software (e.g. drivers) for those external systems, before you’re ready to move to full ICE with external motherboards and chipsets. Modeling using virtual bridge adapters provides a way to support this.

Frank offered two customer case-studies in support of this use model. Mellanox talked at CDNLive in Israel about using virtual adapters and speed bridges in a hybrid mode for in-circuit-acceleration (ICA). They indicated that this provides the best of both worlds – speed and fidelity in the stable part of the system circuit and flexibility/adaptability in software development and debug for evolving components.

Nvidia provided a more detailed view of how they see the role of ICE and virtual bridging. First, for them there is no question that (hardware-based) ICE is the ultimate reference test platform. They find it to be the fastest verification environment, proven and ideal for software validation. It also has the flexibility and fidelity to test against real-world conditions, notably including errata (something that might be difficult to fully cover in a virtual model). However, applying only this approach is becoming more challenging in the development phase as they must deal with an increasing number of PCIe ports, more GPUs and more complex GPU/CPU traffic, along with a need to support new and proprietary protocols.

For Nvidia, virtual bridge adapters help with emulation modeling for these needs. Adding more PCIe ports becomes trivial since they are virtual. They can also provide adapters for their own proprietary protocols and support both earlier versions and the latest revisions. As mentioned above, the ability to stop the clock greatly enhances ease of debug while in development. At the same time Nvidia were quick to point out that virtual-bridge and speed-bridge solutions are complementary. Speed bridges give higher performance and ensure traffic fidelity. Virtual bridges provide greater flexibility earlier in the development cycle. Together they cover both sets of needs.

The big emulation providers have at times promoted ICE over virtualization or vice-versa; perhaps unsurprisingly, the best solution now looks like a combination of both. As always, customers have the final say. You can watch Nvidia’s comments on the Palladium-based solutions HERE.
