The basic principles behind neural networks have been understood for decades. What has changed to make them so successful in recent years is the increase in processing power, storage and training data. Layered on top of this is continued improvement in algorithms, often enabled by dramatic hardware performance improvements. There was a time not all that long ago when classifying objects in a still picture was impressive, and this was often done with training and classification running on large servers. The growing demand for autonomous vehicles has raised the bar. What is needed is the ability to perform real-time detection and recognition of objects at high frame rates within the power, size and reliability constraints of automotive systems.

Recently in San Jose the Autonomous Vehicle Hardware Summit brought together innovators in this field to discuss the latest technology trends. Autonomous vehicles must be able to identify and classify multiple objects in each frame of high-resolution video. Frame rates must be high enough to keep up with high vehicle speeds. One new neural network type is extremely good at this. It is known as YOLOv3, the third version of You Only Look Once (YOLO). It avoids a problem with older approaches, which need to break each frame up into separate identification tasks based on the detection of potential objects in various parts of the image. In those earlier techniques, each candidate region had to be run through a separate recognition step to determine what, if anything, it contains.

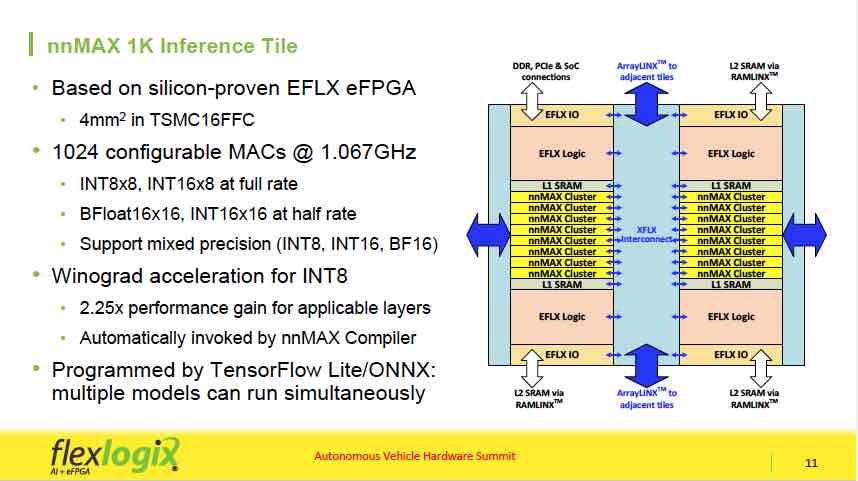

YOLO works on the whole image at once, locating and recognizing objects much faster. Of course, its processing power and memory demands make this approach more difficult to implement. At the summit, Dr. Cheng C. Wang, Co-Founder & Senior VP Engineering/Software at Flex Logix Technologies, outlined the company's approach to tackling this challenge. At a resolution of 1920 x 1080, YOLOv3 can require over 100 giga-operations per frame. Flex Logix offers modular neural inference building blocks called nnMAX 1K tiles. These tiles can be added to the company's EFLX embedded FPGA to create specialized silicon hardware configurations that maximize performance.

YOLOv3 is made up of over 100 layers, often requiring over 200 billion multiply-accumulate (MAC) operations. The Flex Logix nnMAX 1K tile contains 1024 MACs in clusters of 64, with weights stored locally in L0 SRAM. It supports a wide range of data types, with optimizations such as Winograd acceleration applied when appropriate. nnMAX tiles can be reconfigured rapidly at runtime between layers to optimize data movement. For instance, layer 0 and layer 1 operations can be combined so that the intermediate data stays in SRAM. In fact, the nnMAX compiler will automatically combine layers in this manner if there are enough resources.
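To get a feel for where MAC counts of this size come from, here is a minimal sketch that counts the MACs in a single convolution layer and divides the work across one 1K tile. The tile and cluster sizes come from the text above. The layer shape (a 3x3 convolution from 3 to 32 channels over a 1920 x 1080 image) and the ideal one-MAC-per-unit-per-cycle model are assumptions chosen for illustration, not figures from the Flex Logix presentation.

```python
def conv_macs(out_h, out_w, in_ch, out_ch, kernel):
    """Multiply-accumulates needed by one square-kernel convolution layer."""
    return out_h * out_w * out_ch * kernel * kernel * in_ch


TILE_MACS = 1024     # MAC units per nnMAX 1K tile (from the article)
CLUSTER_MACS = 64    # MAC units per cluster (from the article)

clusters_per_tile = TILE_MACS // CLUSTER_MACS

# Assumed example layer: 3x3 convolution, 3 -> 32 channels, stride 1,
# output the same size as a 1920 x 1080 input.
layer0_macs = conv_macs(out_h=1080, out_w=1920, in_ch=3, out_ch=32, kernel=3)

# Idealized cycle count on one tile: every MAC unit busy on every cycle
# (an assumption; real utilization is lower). Ceiling division.
ideal_cycles = -(-layer0_macs // TILE_MACS)

print(f"clusters per tile: {clusters_per_tile}")
print(f"MACs in example layer: {layer0_macs:,}")
print(f"ideal cycles on one 1K tile: {ideal_cycles:,}")
```

Even this one small layer needs well over a billion MACs at full HD resolution, which helps explain why a network with more than 100 layers lands in the hundreds of billions.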

Flex Logix’s ArrayLinx is used to perform the interconnect remapping of thousands of wires between nnMAX tiles. nnMAX can also connect to 1, 2 or 4 MB of SRAM, depending on how it is configured. Optimizing SRAM configurations can allow for nnMAX arrays that perform up to 100 TOPS.

One of Dr. Wang’s main points is that when running YOLOv3 on nnMAX tiles, performance scales effectively as resources are added. nnMAX can be configured in a wide variety of array sizes. His performance example is video at 2 MP per frame. With nnMAX 4K and 8 MB of SRAM, it can handle 10 fps. Going to nnMAX 8K with 32 MB of SRAM yields 24 fps. And an impressive 48 fps can be reached with nnMAX 16K and 64 MB of SRAM.
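The scaling claim can be checked with some quick arithmetic on the three quoted configurations. The sketch below uses only the array sizes, SRAM sizes and frame rates given above. It assumes that "4K", "8K" and "16K" mean 4, 8 and 16 tiles of 1024 MACs each, and the field names are made up for this example.

```python
# (name, number of 1K tiles, SRAM in MB, frames per second) as quoted above
configs = [
    ("nnMAX 4K", 4, 8, 10),
    ("nnMAX 8K", 8, 32, 24),
    ("nnMAX 16K", 16, 64, 48),
]

base_tiles, base_fps = configs[0][1], configs[0][3]

rows = []
for name, tiles, sram_mb, fps in configs:
    rows.append({
        "name": name,
        "fps_per_tile": fps / tiles,            # throughput per 1K tile
        "sram_mb_per_tile": sram_mb / tiles,    # SRAM provisioned per 1K tile
        "resource_ratio": tiles / base_tiles,   # MAC resources vs. smallest config
        "speedup": fps / base_fps,              # frame rate vs. smallest config
    })

for r in rows:
    print(f'{r["name"]:>10}: {r["fps_per_tile"]:.1f} fps/tile, '
          f'{r["sram_mb_per_tile"]:.0f} MB/tile, '
          f'{r["resource_ratio"]:.0f}x MACs -> {r["speedup"]:.1f}x fps')
```

Going from 8K to 16K doubles both the MAC count and the frame rate. The step from 4K to 8K gains more than 2x, which coincides with the jump from 2 MB to 4 MB of SRAM per tile in the quoted numbers.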

Flex Logix also supplies a complete software development environment to accompany the embedded FPGA and nnMAX tiles. The nnMAX compiler will map neural networks from TensorFlow or ONNX onto the hardware. Given any neural network, the software can output performance metrics based on nnMAX provisioning, the amount of SRAM, and DRAM bandwidth. This makes it easy to understand MAC utilization and the overall efficiency of the proposed architecture.
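MAC utilization is commonly defined as the MAC throughput a workload actually achieves divided by the peak throughput the hardware could deliver. The sketch below shows that textbook calculation. It is not the Flex Logix tool or its output. The function name is hypothetical, the clock frequency is a placeholder rather than a published nnMAX figure, and the 200 billion MACs is assumed here to be a per-frame count.

```python
def mac_utilization(macs_per_frame, fps, mac_units, clock_hz):
    """Fraction of peak MAC throughput used, assuming one MAC per unit per cycle."""
    achieved_macs_per_s = macs_per_frame * fps
    peak_macs_per_s = mac_units * clock_hz
    return achieved_macs_per_s / peak_macs_per_s


# Placeholder value for illustration only; not a published nnMAX clock rate.
HYPOTHETICAL_CLOCK_HZ = 1e9

utilization = mac_utilization(
    macs_per_frame=200e9,   # assumed per-frame MAC count (see lead-in)
    fps=24,                 # nnMAX 8K frame rate quoted in the article
    mac_units=8 * 1024,     # nnMAX 8K, assuming 8 tiles of 1024 MACs
    clock_hz=HYPOTHETICAL_CLOCK_HZ,
)
print(f"utilization at the assumed clock: {utilization:.1%}")
```

The resulting percentage depends entirely on the assumed clock, so treat it as a way to read such a report rather than as a measurement.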

This new development from Flex Logix looks very exciting for the highly demanding automotive market. YOLO has been a game changer and is rapidly becoming a favorite for real-time image processing. YOLOv3 running on silicon designed with Flex Logix IP should provide an effective solution for meeting the demanding requirements of autonomous vehicle hardware. The presentation from the Autonomous Vehicle Hardware Summit can be found on the Flex Logix website.
