We (SemiWiki) have been working with Fractal for close to five years now, publishing 25 blogs that have garnered more than 100,000 views. Generally speaking, QA people are seen as the unsung heroes of EDA, since the only time you really hear about them is when something goes wrong and a tapeout is delayed or a chip is respun.

FinFETs really changed QA by introducing many more complexities that require an increasing number of checks, for example on timing, power, and noise characterization. The foundries and leading-edge companies are forgoing internal tools in favor of Crossfire from Fractal, where they can collaborate (crowdsource) and increase QA confidence.

The Fractal people and I have crossed paths many times over the years, working for some of the same companies, and recently I joined Fractal for business development work that you will be reading about moving forward. Fractal really is an impressive company with a unique approach to a very challenging market segment. It is my hope that Fractal can be an example to other emerging EDA companies who want to solve, in a very sensible way, problems others have not addressed.

Tell me a little about your background. What brought you to running Fractal?

I started my career at Sagantec, which is how I got involved in the EDA industry. I have a financial background and later became responsible for worldwide customer support and operations management. Seven years ago we noticed a need in the design community for a standardized approach to quality assurance that could replace internal solutions, so Fractal was established by the three founding members and I ended up taking the CEO role.

Given our previous experience, we decided to build Fractal with the three founders as the only shareholders. Our strategy is to grow the company by adding customers and investing the returns from purchase orders in software and application engineers. Looking at where we are today, I would say we successfully pulled this off. Fractal now has 20+ customers, 50% of which are top 20 semiconductor companies. At the same time we always have several evaluations ongoing, so we’re looking at good continued growth prospects.

Why in your view is library quality important? What is the impact of library errors?

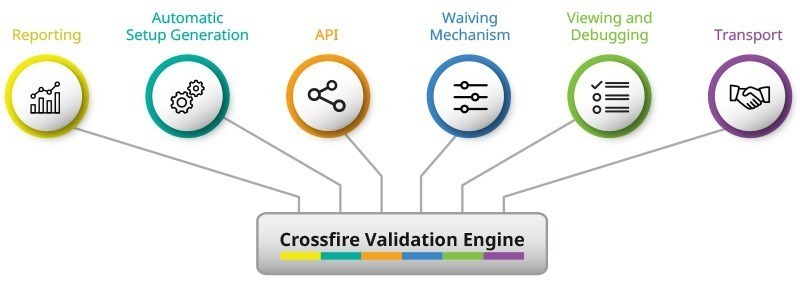

It is interesting that you ask about libraries, because when we look at the usage of Crossfire, our IP validation solution, 25% is on standard cell libraries and 75% is on other IP such as IOs, analog, SRAM, mixed signal, SerDes, etc.

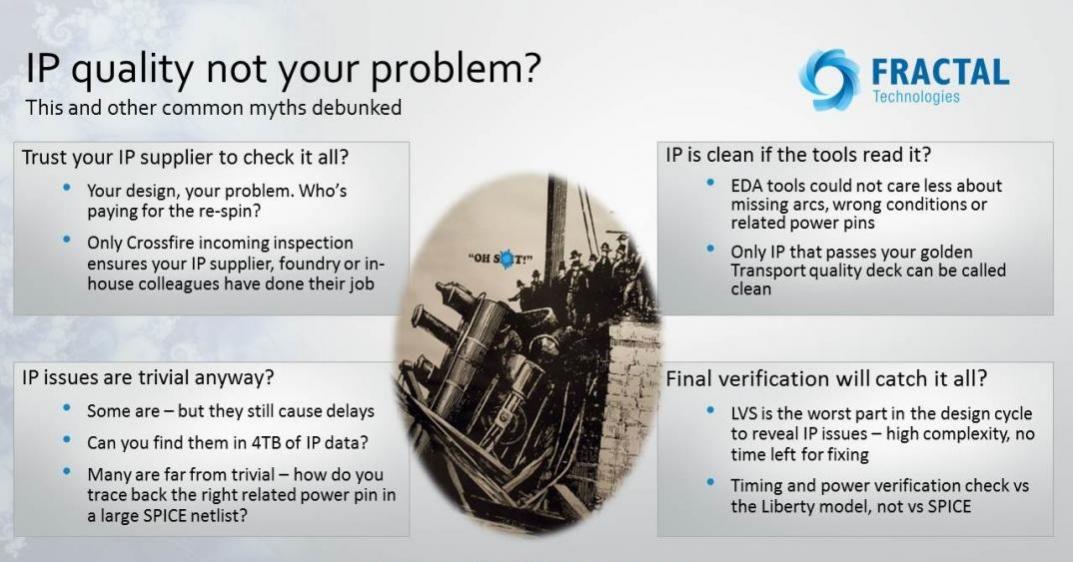

Any error in your IP design data is a potential risk for your design. Finding errors in IP models at the end of a design project could create a huge problem for meeting your tapeout deadline. For example, characterization issues are notoriously hard to spot without rigorous checking. For a standard-cell library at an advanced node we are literally talking terabytes of .lib files. Now suppose one of those process corners had characterization issues. Without QA incoming inspection, these will pop up as issues during timing and power verification. It is then difficult, very late in the design cycle, to trace these back to the characterization and get everything fixed by the library provider, only to then see the real timing and power issues coming from the design.
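As a rough illustration of the kind of characterization check meant here, the Python sketch below flags process corners whose delay does not increase with output load. The corner names, the delay values, and the monotonicity rule itself are assumptions chosen for illustration; this is neither a .lib parser nor a description of how Crossfire works.

```python
from typing import Dict, List

# Hypothetical input: for each corner, cell delay (ns) sampled at
# increasing output load. Real data would be read from .lib files.
DelayTable = List[float]


def non_monotonic_corners(tables: Dict[str, DelayTable]) -> List[str]:
    """Return the corners whose delay fails to increase with load."""
    suspicious = []
    for corner, delays in tables.items():
        # Every sample must be strictly larger than the previous one.
        if any(later <= earlier for earlier, later in zip(delays, delays[1:])):
            suspicious.append(corner)
    return sorted(suspicious)


tables = {
    "ss_0p72v_125c": [0.10, 0.14, 0.21, 0.35],
    "tt_0p80v_25c": [0.08, 0.11, 0.09, 0.27],  # dip at the third load point
}
print(non_monotonic_corners(tables))
```

Run as incoming inspection, a check of this kind would point at the suspect corner before timing verification starts, rather than after.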

This is why our philosophy at Fractal is to look beyond just qualifying the library or IP before it is used in the design. IP vendors and users need to be aligned on IP quality requirements. To that end we have designed the Transport formalism for unambiguously describing QA requirements. It can be used as a hand-off between IP consumers and providers, very much like how a DRC deck is used.

Where is this an issue – foundation IP, hard IP, hardened versions of soft IP, internal IP, external IP, …? Which of these tends to create more problems?

First, a general remark applicable to all IP used by our customers. IP validation is all about the quality of your design data. Having an IP validation solution in place that is used by all of your internal design teams will enforce a minimum level of quality on your design data. If the same IP validation is applied to external IP providers, you ensure this base level applies to all IP used in your design flow. Our customers do this through Crossfire Transport, a formalism in which they can unambiguously express their quality requirements. These Transport decks can then be used by their IP providers, either internal or external, to ensure IP releases are compliant before they are shipped.

The next step is to gradually add more checks to the Transport decks and discuss the criteria with the IP providers, so both parties support these QA requirements and see a benefit in making sure they are met.

This brings me to the different categories you indicated in your question. In general, regardless of the category, the problem is the same: if an IP issue shows up very late in the design flow, it endangers your schedule and at the very least demands a lot more effort from the design team, effort which cannot be spent on the design itself. For the different IP categories the mechanisms are different though. A hard IP block may show GDS or LEF vs SPICE mismatches when a black-box representation is replaced by the real model before final verification. A hardened soft IP may deviate slightly from RTL, perhaps for good reason, but your LVS checker or router doesn’t know that!
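To make the hard IP example concrete, here is a minimal Python sketch of a cross-view consistency check: it compares the pin names found in each view of a block and reports what each view is missing. The view names and pin sets are hypothetical and hard-coded; in a real flow they would be extracted from the LEF, SPICE, and GDS data, and this sketch does not represent Crossfire's own checks.

```python
from typing import Dict, Set


def pin_mismatches(views: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """For each view, return the pins seen in other views but missing here."""
    # The union of all pins across views serves as the reference set.
    all_pins = set().union(*views.values())
    return {
        name: all_pins - pins
        for name, pins in views.items()
        if all_pins - pins
    }


# Hypothetical pin lists for one block, one entry per design view.
views = {
    "lef": {"A", "B", "Y", "VDD", "VSS"},
    "spice": {"A", "B", "Y", "VDD", "VSS"},
    "gds": {"A", "B", "Y", "VDD"},  # VSS label missing in the layout view
}
print(pin_mismatches(views))
```

Views that agree with the reference set are left out of the result, so an empty dictionary means the views are consistent with each other.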

What we see with our customers as an important distinction is the difference between internally designed IP and externally sourced IP. Internally designed IP is almost always easier to deal with as the design groups will be using the same CAD flow, base-libraries, naming conventions, etc. So the result is very likely matched with the design in which it needs to be integrated.

External IP on the other hand has none of these “natural synergies”. However skilled the external designers, there’s a fairly high chance some aspects of it won’t match with your design or verification tools or with the other IP blocks deployed in the design. That’s why it’s important to have the characteristics that make an IP block integrate seamlessly captured in a formalism like Transport. It takes a couple of iterations to get there, because many of these issues are so obvious to you as a user that it’s hard to imagine they’re not obvious to your IP provider.

If we can agree on a standard for IP validation, at least everybody involved in IP quality will use the same solution, which makes the exchange of setups and reports possible. If you can provide your IP supplier with an IP validation setup that meets your QA standards, and ask them to run it before shipping any IP, we are sure that problems with using external IP on your SoC will be minimal.

Why shouldn’t I expect library providers to fix their own problems?

First of all, in spite of all good intentions, library providers are not library users. Unless you explicitly inform them of your library QA requirements you cannot be sure that a library delivery is compliant.

Another part of the answer is that for library providers to fix their own problems we should give them the means to do so. From a QA point of view I am convinced that you should check your design data with a different, preferably independent tool. Checking your design data with the same tool or provider that creates it could be a problem. How can you expect to find issues with the same tool or provider that created your design data in the first place? Part of the reason we exist is that we are tool and provider independent. Crossfire is not an IP or library generation tool, nor is it part of an SoC design flow. This makes it ideally suited as an independent, third-party validation solution.

Don’t design teams figure out ways to patch together their own solutions? What’s wrong with that approach?

This is the historic approach we see at all our customers since there was no commercial alternative available on the market. And let’s face it, that’s what engineers are really good at: give them a problem and they’ll find some way of fixing it or working around it. Consequently, each design-company built its own IP validation solution, mostly a scripting environment. With no alternative solution available there is nothing wrong with this approach. During most of our evaluations we are benchmarked against such an internal solution.

Of course, we think proprietary solutions do have disadvantages:

- Who maintains the in-house solution? What if the engineers leave the company?

- Similarly, who updates the in-house solution when new formats and checks are needed, for example because the company moves to a smaller technology node?

- Proprietary solutions mostly work only for internal IP. Incoming inspection of external IP is not possible, even though external IP sometimes makes up 40-50% of your design.

Our typical take on this subject is to integrate those proprietary checks that are really design-style or tooling specific within Crossfire, and leave the bulk of the generic checks to a future-proof tool like Crossfire. This way customers get a better overall solution and yet continue to benefit from years of investment in unique proprietary checks.

Why isn’t this a one-time check? Why do we need continuous checking?

The cornerstone of QA is a continuous feedback cycle to improve the overall quality level. If you only run incoming inspection on IP, you’ll be finding the same issues over and over again. What you need is a feedback loop that addresses the root-causes of these issues.

We strongly believe in an IP validation flow used as part of your IP design regression testing. Once you have design data available, why wait until the end of your design project to run validation checks? If there are issues, you would rather find them as early as possible!

How do you see some of the biggest houses using this today (no names)?

Of our 20+ customers, 50% are listed as top 20 semiconductor companies. Certainly, in the last couple of years we have been able to convince these big companies to replace existing internal solutions with Crossfire, our IP validation solution. One of the reasons is that more and more companies agree that maintaining an internal validation solution is not their core business, provided a state-of-the-art commercial solution is available on the market.

Another major reason is the adoption of Transport, our formalism for specifying QA requirements. These Transport decks allow customers to export existing Crossfire setups and send them to other internal groups or external IP providers. What we are now seeing is that some of the largest fabless customers are demanding Transport compliance for external IP deliveries, very much like a foundry would require DRC-correctness of the GDS. With an internal scripting environment, this would never have been possible.

Why do you think this problem is going to become even harder and more important to solve going forward?

With smaller technology nodes we see an increase in on-chip variability. This simply drives up the data volume into the terabyte range, so your QA tools also need to be designed from the ground up to deal efficiently with that amount of data. That’s another way of saying “forget scripting”.

On the other hand we see an increasing interconnectedness of design aspects like timing, power, signal integrity and reliability. Your design needs to be optimized for all these aspects at the same time; you simply cannot leave one of them as an afterthought. This leads to increasing demands on the consistency of the different models.

Can you give us any insight into your other thoughts for future trends in the market and for Fractal?

I think that smaller technology nodes will mean more design groups turning towards Fractal, as their internal solutions will no longer be adequate. Investing more time in such an internal solution is a waste for our customers. They should focus on new, better, faster designs and let us worry about the QA of the design data.

Another opportunity is in the shakeout happening among the providers of smaller nodes. In the end we see only very few foundries offering, for example, N7 manufacturing. This is an excellent opportunity to standardize the QA aspects of libraries and IP blocks targeted for these nodes using Transport, with Crossfire as the validation tool. And even if Moore’s law were to suddenly come to an end, our belief is that our customers are going to focus even more on their core competencies and their usage of third-party IP will remain strong.

I would say, “let’s talk Crossfire” whenever we talk about the Quality of Design Data. If everybody speaks the same Crossfire language, exchange of IP (internal and external) should become easier.

