Before the advent of convolutional neural networks (CNNs), image processing was done with algorithms like HOG, SIFT and SURF. This kind of work was frequently done in data centers and server farms. To facilitate mobile and embedded processing, a new class of processors was developed: the vision processor. In addition to doing a fair job of recognition, these processors were good at the matrix math and pixel operations used to filter and clean up image data or identify areas of interest. However, the real transformation for computer vision occurred when a CNN won the ImageNet Large Scale Visual Recognition Challenge in 2012. The year before, the winning non-CNN approach achieved an error rate of 25.8%, which was considered quite good at the time. In 2012 the CNN called AlexNet came in first with an error rate of 16.4%.

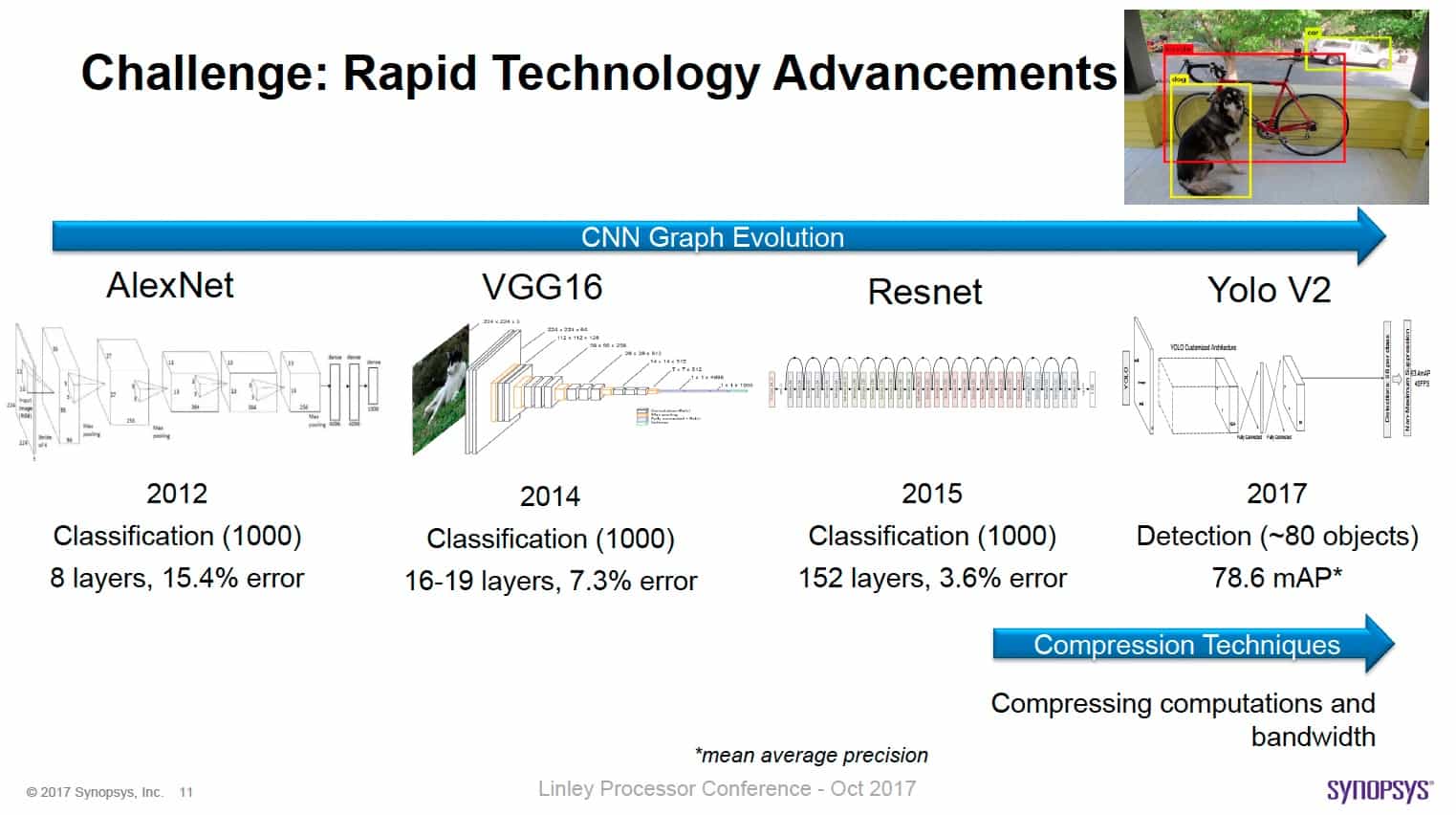

Just three years later, in 2015, CNNs had pushed the error rate down to 3.57%, beating the human error rate of 5.1% on this particular task. The main force behind ImageNet is Stanford’s Fei-Fei Li, who pioneered the ImageNet image database. It was many orders of magnitude larger than any previous classified image database. In many ways, it laid the foundation for the rapid progress of CNNs by providing a comprehensive source of training and testing data.

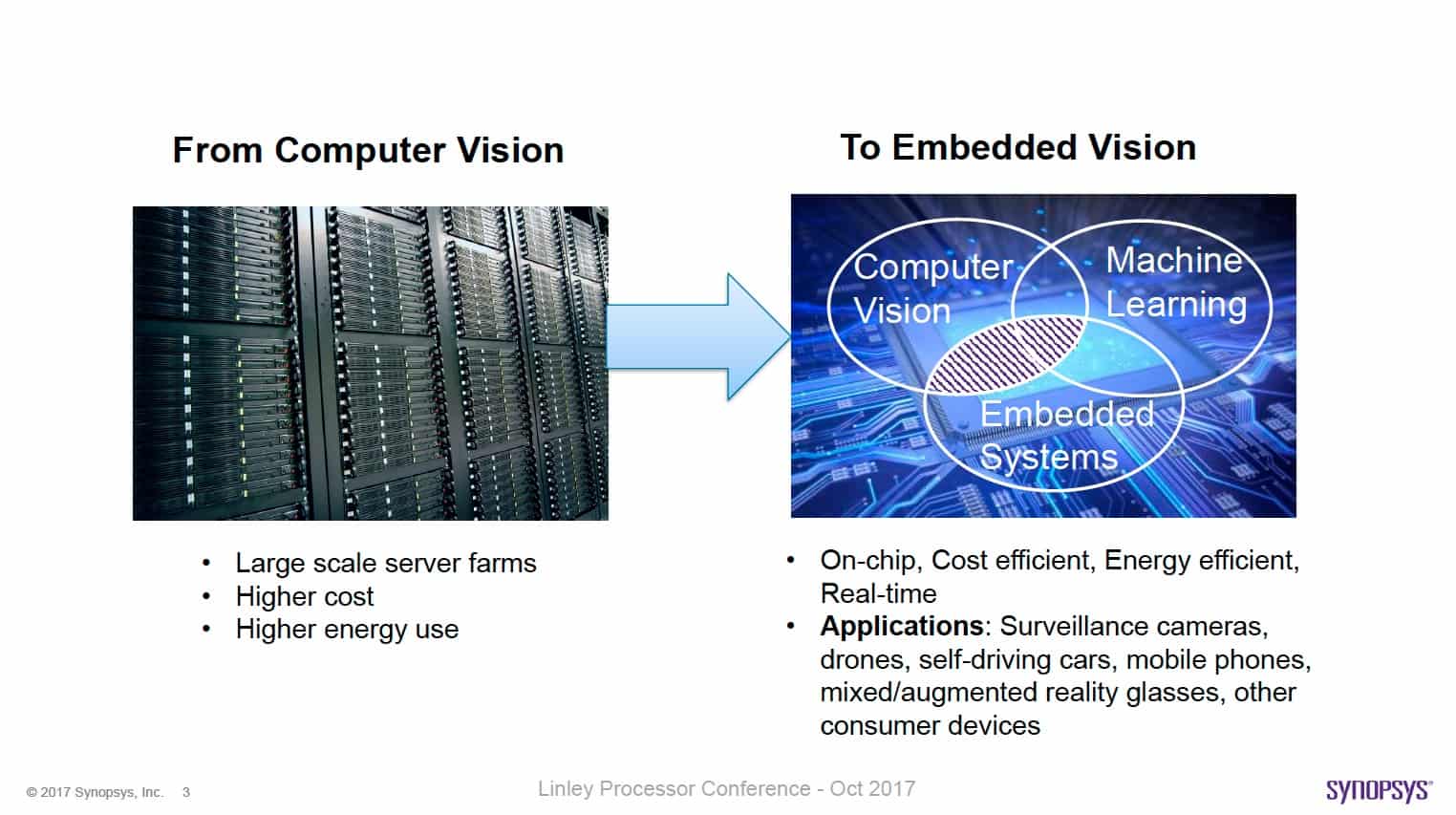

In the years since 2012 we have seen tremendous growth in the applications for CNNs and image processing in general. A big part of this growth has taken computer vision out of the server farm and moved it closer to where image processing is needed. At the same time, the need for speed and power efficiency has increased. Computer vision is used in factories, cars, research, security systems, drones, mobile phones, augmented reality, and many other applications.

At the Linley Processor Conference in Santa Clara in October, Gordon Cooper, embedded vision product marketing manager at Synopsys, talked about the evolution of their vision processors to meet the rigorous demands of embedded computer vision applications. What he calls embedded vision (EV) is different from traditional computer vision: EV combines computer vision with machine learning and embedded systems. It also faces three pressures. EV performance is strained by increasing image sizes and frame rates. Power and area constraints have to be met. Finally, the technology is advancing rapidly. If you look at the ImageNet competition, you will see that each year the core algorithm has changed dramatically.

Indeed, approaches to CNNs have evolved rapidly. The number of layers has increased from about 8 to over 150. At the same time, hybrid approaches that fuse traditional computer vision and CNN techniques are becoming more prominent. To support these newer approaches, Synopsys is offering heterogeneous processing units in their EV DesignWare® IP. These consist of scalar, vector and CNN processors in one integrated SoC IP. The Synopsys DesignWare® EV6x family offers from 1 to 4 scalar and vector processors combined with a CNN engine. This IP is supported by a standards-based software toolset with OpenCV libraries, an OpenVX framework, an OpenCL C compiler, a C++ compiler and a CNN mapping tool.

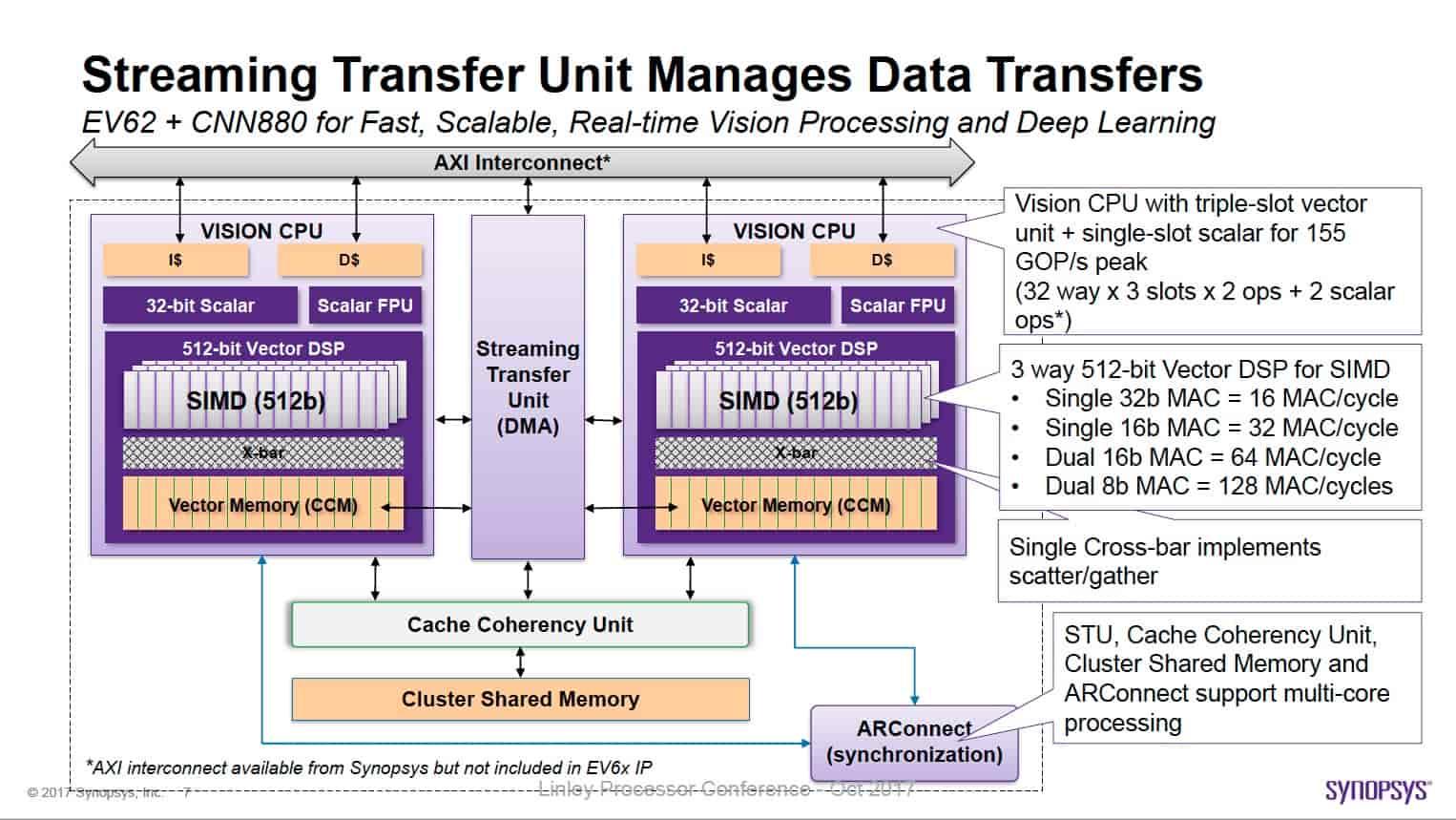

The processing units are all connected by a high-speed interconnect, so operations can be pipelined to increase efficiency and parallelism during frame processing. The EV6x family supplies a DMA Streaming Transfer Unit that facilitates data transfers to speed up processing. Here is the system block diagram for the vision CPUs.

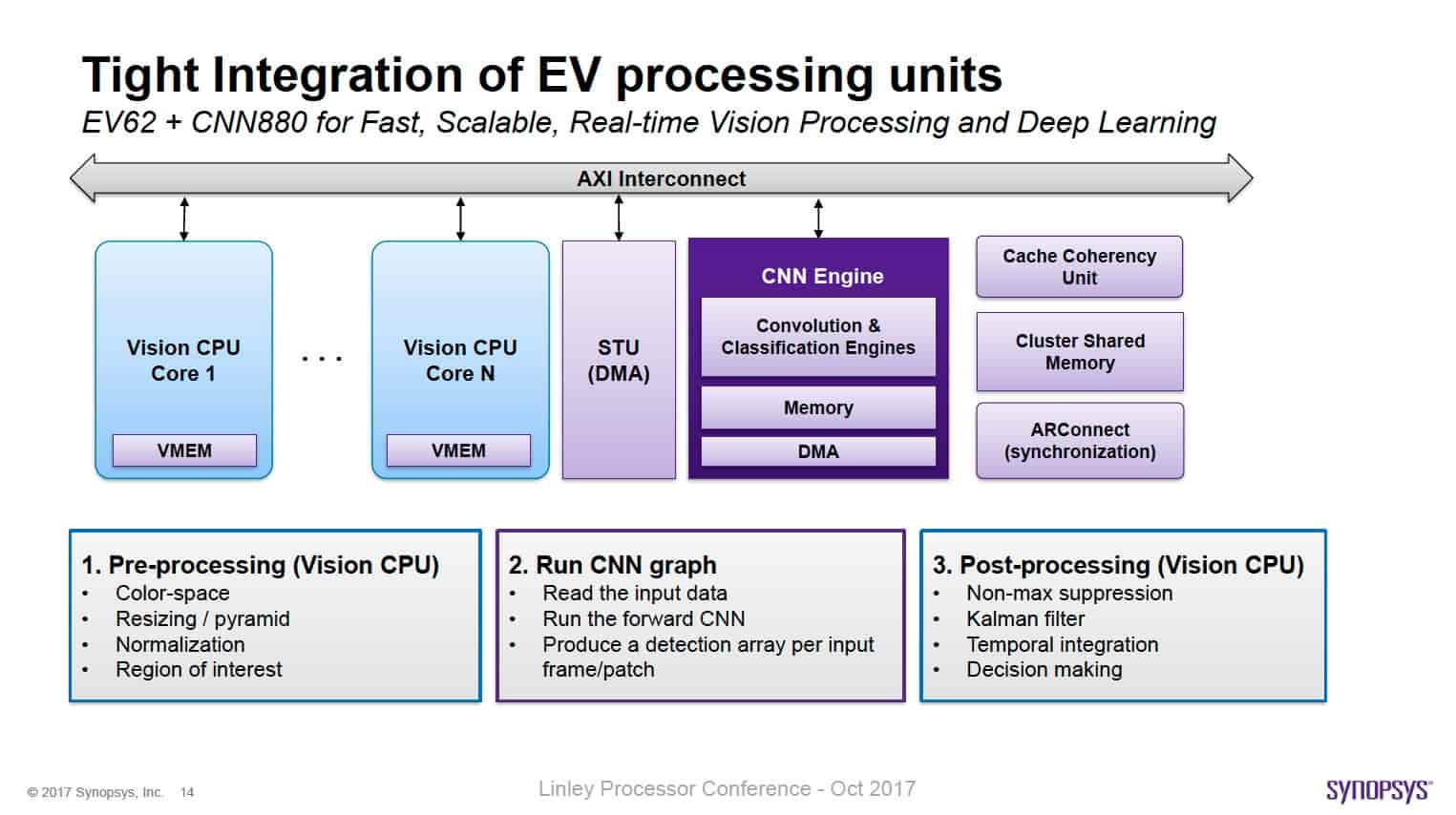
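To get a feel for why pipelining across the processing units matters, here is a toy timing model in Python. It assumes an idealized pipeline in which each stage takes a fixed time per frame and stages overlap perfectly on different frames. The stage times and function names are hypothetical and are not EV6x measurements.

```python
# Toy timing model of frame processing. The per-stage times passed in are
# hypothetical illustrations, not measured EV6x figures.

def sequential_time(stage_ms, frames):
    """Total time when each frame finishes every stage before the next starts."""
    return frames * sum(stage_ms)


def pipelined_time(stage_ms, frames):
    """Total time when stages overlap on different frames.

    The first frame pays the full latency; after that, one frame completes
    every max(stage_ms), because the slowest stage sets the rate.
    """
    if frames == 0:
        return 0.0
    return sum(stage_ms) + (frames - 1) * max(stage_ms)


if __name__ == "__main__":
    # Hypothetical stages: scalar control, vector filtering, CNN inference.
    stages = [2.0, 3.0, 5.0]
    print("sequential:", sequential_time(stages, 30), "ms")
    print("pipelined: ", pipelined_time(stages, 30), "ms")
```

In this simplified model the slowest stage bounds the frame rate, which is one reason balancing work between the vector units and the CNN engine is worth the effort.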

The EV6x IP is completed with the addition of the CNN880 engine. It is programmable to support a full range of CNN graphs, and it can be configured with 880, 1760 or 3520 MACs. Implemented in 16nm and running at 1.28GHz, the 3520-MAC configuration is capable of 4.5 TMAC/s under typical conditions. As for power efficiency, it delivers better than 2000 GMAC/s/W.
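The throughput figure follows from simple arithmetic. Assuming each MAC unit completes one multiply-accumulate per clock cycle (an assumption, since the talk quoted only totals), the short Python sketch below reproduces the roughly 4.5 TMAC/s number for the largest configuration and derives the power budget implied by the quoted efficiency floor. The function names are illustrative and not part of any Synopsys tool.

```python
# Back-of-the-envelope check of the CNN engine figures quoted above.
# Assumption: every MAC unit retires one multiply-accumulate per clock cycle.

CLOCK_HZ = 1.28e9                  # 1.28 GHz, from the text
MAC_CONFIGS = (880, 1760, 3520)    # configurable MAC counts, from the text
EFFICIENCY_GMACS_PER_W = 2000.0    # "better than 2000 GMAC/s/W", used as a floor


def peak_tmacs(num_macs, clock_hz=CLOCK_HZ):
    """Peak throughput in TMAC/s for a given number of MAC units."""
    return num_macs * clock_hz / 1e12


def implied_power_watts(tmacs, gmacs_per_watt=EFFICIENCY_GMACS_PER_W):
    """Upper bound on power implied by a throughput and an efficiency floor."""
    return tmacs * 1000.0 / gmacs_per_watt


if __name__ == "__main__":
    for macs in MAC_CONFIGS:
        t = peak_tmacs(macs)
        w = implied_power_watts(t)
        print(f"{macs:5d} MACs: {t:.2f} TMAC/s, at most about {w:.2f} W")
```

Applying the efficiency figure to every configuration is itself an assumption; the talk did not say which configuration or workload it was measured on.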

By combining the capabilities of traditional vision CPUs with a CNN processor, the EV6x processors can achieve much higher efficiency than either alone. For instance, traditional vision processors can remove noise and perform adjustments on the input stream, improving the efficiency of the CNN processor. The vision processors can also help with area-of-interest identification. Finally, because these systems operate in a real-time environment, the additional processing capabilities of the EV6x can be used to act on the image processing results. In autonomous driving scenarios, immediate higher-level action may be required, such as actuating steering, braking or other emergency systems.
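As a rough illustration of that division of labor, the sketch below uses plain Python to stand in for the pre-processing a vision CPU might do before handing data to a CNN: a 3x3 mean filter for noise removal, followed by a threshold-based bounding box as a very simple form of area-of-interest identification. The filter, the threshold and all function names are assumptions chosen for clarity; none of this is a Synopsys API or the method described in the talk.

```python
# Illustrative only: a pure-Python stand-in for "clean up, find the area of
# interest, then pass a smaller crop to the CNN". Not a Synopsys API.

def box_blur(image):
    """3x3 mean filter with edge clamping; a simple noise-reduction step."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += image[yy][xx]
            out[y][x] = total / 9.0
    return out


def region_of_interest(image, threshold):
    """Bounding box (top, left, bottom, right) of pixels above threshold."""
    rows = [y for y, row in enumerate(image) if any(p > threshold for p in row)]
    cols = [x for x in range(len(image[0]))
            if any(row[x] > threshold for row in image)]
    if not rows:
        return None
    return rows[0], cols[0], rows[-1], cols[-1]


def crop(image, box):
    """Cut the bounding box (inclusive coordinates) out of the image."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]


def preprocess(image, threshold=0.5):
    """Denoise, then keep only the area of interest for the CNN stage."""
    smoothed = box_blur(image)
    box = region_of_interest(smoothed, threshold)
    return crop(smoothed, box) if box is not None else None
```

On a frame containing one bright object and an isolated noisy pixel, blurring first keeps the stray pixel from inflating the bounding box, so the CNN stage receives a smaller crop to process.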

Embedded vision is an exciting area right now and will continue to evolve rapidly. It’s not enough to have recognition, or in some cases even training, done in a server farm. All the activities related to computer vision will need to be mobile. This requires hybrid architectures and computational flexibility. Any system fielded today will probably see major software updates as training and recognition algorithms evolve, so a platform that can adapt and is easy to develop on will be essential. Synopsys has certainly taken major steps toward accommodating today’s approaches and future-proofing their solutions. For more information on Synopsys computer vision IP and related development tools, please look at their website.
