FPGAs have become far more capable and powerful, now more closely resembling SoCs than the glue logic we once considered them to be. Look at any big FPGA, such as a Xilinx Zynq, an Intel/Altera Arria or a Microsemi SmartFusion: these devices are full-blown SoCs, functionally different from an ASIC SoC only in that part of the device is programmable.

All that power greatly increases verification challenges, which is why adoption of the full range of ASIC verification techniques, including static and formal methods, is growing fast in FPGA design teams. The functional complexity of these devices is far too great to converge on a working design by iterating through lab testing. One such verification problem, in which I have a little background, is analysis of clock domain crossings (CDCs).

CDCs and SoCs go hand in hand, since any reasonable SoC will contain multiple clock domains. There’s a domain for the main CPU (possibly multiple domains for multiple processors/accelerators), and likely a separate domain for the off-chip side of each peripheral whose protocol rigorously restricts the choice of clock speed. The bus fabric connecting these devices may itself support multiple protocols, each running on a different clock. Clock speeds proliferate in SoCs.

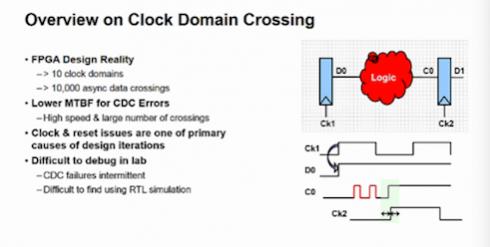

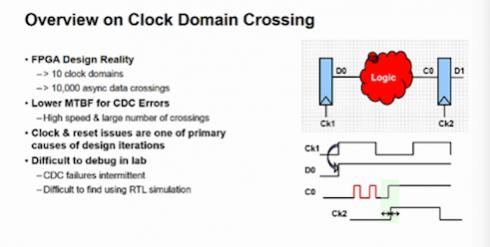

That’s why CDCs are found all over an SoC. Anywhere data can cross from one of these domains to another, from the off-chip side of a peripheral to the bus for example, or through a bus bridge, there is a clock domain crossing. It is not uncommon to find at least 10 different clocks on one of these FPGA SoCs, which can imply 10,000 or more CDCs scattered across the FPGA. The “so what” here is that CDCs, if not correctly handled, can fail very unpredictably and can be very difficult to check, either in simulation or in lab testing. And if a CDC problem escapes testing, your customers are going to find it in their design, very often in the field, as an intermittent lock-up or functional error. When the design is going into a space application, military avionics or any other critical application, this state of affairs is obviously less than desirable.

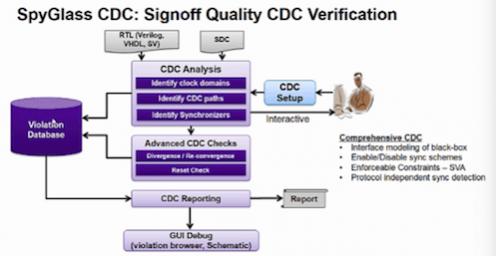

Simulation can help minimize the chance of such failures, but it requires special checking and its value is bounded by the limited set of use cases you can test. This has prompted significant focus on static (and formal) methods, which are independent of use cases and therefore offer more comprehensive coverage. And in this domain, I am pretty certain that no commercial tool has the combined pedigree, technology depth and user base offered by SpyGlass CDC, especially in ASIC design. It looks like Synopsys has been polishing the solution to extend the many years of ASIC expertise built into the tool to FPGA design teams, through collaboration with FPGA vendors and by adding methodology support for standards like DO-254.

You might reasonably question why another tool is needed, given that the FPGA vendor tools already provide support for CDC analysis. Providing some level of CDC analysis for the basic approaches to managing CDC correctness is not too difficult and this is what you can expect to find in integrated tools. But as designs and design tricks become more complex, providing useful analysis rapidly becomes much more complicated.

By way of example, integrated tools recognize basic 2-flop synchronizers as a legitimate approach to avoid metastability at crossings. But what is appropriate at any given crossing depends heavily on design context. A quasi-static signal like a configuration signal may not need to be synchronized at all; if you do synchronize, you may be wasting a flop (or synchronizer cell) in each such case. Or you may build your own synchronizer cell, one you have established is a legitimate solution but which the tool doesn’t recognize, so you get lots of false errors. Or perhaps you use a handshake to transfer data across the crossing. In this case there’s no synchronizer on the data path; correct operation must be recognized through functional analysis.
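To make the point about context concrete, here is a minimal Python sketch of the kind of structural check an integrated tool might run. It is not how SpyGlass CDC works: `Flop`, `find_unsynchronized_crossings`, the `quasi_static` flag and the `custom_syncs` set are hypothetical names, and the toy netlist model ignores combinational logic between flops. It accepts a basic 2-flop synchronizer and lets up-front user constraints cover the quasi-static and custom-synchronizer cases; a handshake-based transfer would most likely still be flagged, since recognizing it needs functional analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flop:
    name: str
    clock: str
    # Hypothetical user constraint: the signal only changes while the design is
    # effectively idle (e.g. a configuration register), so no synchronizer is needed.
    quasi_static: bool = False


def find_unsynchronized_crossings(flops, fanout, custom_syncs=frozenset()):
    """Return (source, destination) pairs that cross clock domains without a
    recognized synchronizer.

    fanout maps a flop name to the flops it drives directly; combinational
    logic between flops is not modeled in this sketch.
    custom_syncs names destination flops the user has declared to be the input
    of a hand-built synchronizer cell (hypothetical constraint).
    """
    by_name = {f.name: f for f in flops}
    violations = []
    for src in flops:
        for dst_name in fanout.get(src.name, []):
            dst = by_name[dst_name]
            if dst.clock == src.clock:
                continue  # same domain: not a crossing
            if src.quasi_static or dst.name in custom_syncs:
                continue  # covered up front by a constraint, not waived afterwards
            # Basic 2-flop synchronizer: the first capture flop drives exactly
            # one flop, and that flop is clocked in the same destination domain.
            nxt = fanout.get(dst.name, [])
            if len(nxt) == 1 and by_name[nxt[0]].clock == dst.clock:
                continue
            violations.append((src.name, dst.name))
    return violations


# Toy netlist with clk_a -> clk_b crossings.
FLOPS = [
    Flop("cfg_reg", "clk_a", quasi_static=True),
    Flop("data_a", "clk_a"),
    Flop("sync1", "clk_b"),
    Flop("sync2", "clk_b"),
    Flop("raw_b", "clk_b"),
    Flop("cfg_b", "clk_b"),
]
FANOUT = {
    "cfg_reg": ["cfg_b"],
    "data_a": ["sync1", "raw_b"],
    "sync1": ["sync2"],
}

if __name__ == "__main__":
    print(find_unsynchronized_crossings(FLOPS, FANOUT))
```

Running it prints `[('data_a', 'raw_b')]`: the path through `sync1`/`sync2` is accepted, `cfg_reg` is skipped because of its constraint, and `raw_b` is flagged. Declaring `raw_b` in `custom_syncs` clears it; without such context-aware knobs, every configuration register and custom synchronizer would show up as a violation.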

Failing to handle these cases correctly quickly leads to an overwhelming number of false violations. If you must scan through hundreds or thousands of violations, you inevitably start making cursory judgements on what is suspect and what is not a problem; and that’s how real problems sneak through to production.

For many years, the SpyGlass team has worked with CDC users in the ASIC world to reduce this false violation noise through a variety of methods. One is through protocol-independent recognition, a very sophisticated analysis to handle a much wider range of synchronization methods that took many years to develop and refine (and is covered by patents).

A second is analysis of reconvergence: cases where correctly synchronized signals from a common domain are brought back together and, because each synchronizer can resolve in a different cycle, can combine in ways that lose data. A third is careful, detailed support for a methodology that emphasizes up-front constraints over back-end waivers. Following this methodology leaves you a much more manageable task of reviewing far fewer potential violations; as a result you can get to a high-confidence CDC signoff, rather than an “I hope I didn’t overlook anything”.
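Reconvergence is easy to demonstrate with a small model. The Python sketch below (hypothetical function names, a simplified timing model, not SpyGlass’s analysis) assumes each bit of a multi-bit value passes through its own correctly built synchronizer and that any bit may resolve up to `max_skew` destination cycles later than another. Enumerating those timing cases shows which values the reconverging logic can observe that never existed in the source domain.

```python
from itertools import product


def reachable_states(before, after, max_skew=1):
    """States the destination domain can sample while a multi-bit value changes
    from `before` to `after`, when each bit goes through its own synchronizer
    and may settle up to `max_skew` destination cycles later than another bit."""
    n = len(before)
    seen = set()
    for delays in product(range(max_skew + 1), repeat=n):
        for cycle in range(max_skew + 1):
            # A bit shows its new value once its own synchronizer has resolved.
            seen.add(tuple(after[i] if cycle >= delays[i] else before[i] for i in range(n)))
    return seen


def spurious_states(before, after, max_skew=1):
    """States the destination can see that never existed in the source domain."""
    return sorted(reachable_states(before, after, max_skew) - {tuple(before), tuple(after)})


if __name__ == "__main__":
    # Binary count 1 -> 2 (bits 01 -> 10): two bits change at once.
    print(spurious_states((0, 1), (1, 0)))
    # A step that changes only one bit (01 -> 11), as in Gray coding.
    print(spurious_states((0, 1), (1, 1)))
```

For the binary step 01 -> 10 it reports `(0, 0)` and `(1, 1)` as possible transient values, even though every individual bit was synchronized correctly. For a step that changes only one bit, the idea behind Gray-coded pointers (a common remedy), it reports none.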

SpyGlass-CDC will also generate assertions which you can use to check correct synchronization in simulation; this becomes important when you want to validate functionally determined correctness at domain crossings, such as analyzing handshakes, bridges and other functionally-determined synchronization. And if you’re feeling especially brave, SpyGlass CDC also provides an extensive set of embedded formal analysis-based checks, which require very little formal expertise to use.
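As a rough illustration of what such a check verifies, here is a Python trace checker for a four-phase req/ack handshake. It is not a SpyGlass-generated assertion (in practice such checks are usually written as SystemVerilog assertions bound into the simulation); the `Sample` record, the two rules and the assumption that `ack` has already been synchronized into the sender’s clock domain are all illustrative.

```python
from typing import List, NamedTuple


class Sample(NamedTuple):
    """One sender-domain clock cycle; ack is assumed already synchronized."""
    req: int
    ack: int
    data: int


def check_handshake(trace: List[Sample]) -> List[str]:
    """Return a message for each cycle that breaks the handshake rules."""
    errors = []
    for t in range(1, len(trace)):
        prev, cur = trace[t - 1], trace[t]
        # Rule 1: data must hold steady for as long as req stays asserted,
        # because the receiver may capture it in any of those cycles.
        if prev.req and cur.req and cur.data != prev.data:
            errors.append(f"cycle {t}: data changed while req high")
        # Rule 2: req may only be withdrawn after the receiver has acknowledged.
        if prev.req and not cur.req and not prev.ack:
            errors.append(f"cycle {t}: req dropped before ack")
    return errors


if __name__ == "__main__":
    good = [Sample(0, 0, 0), Sample(1, 0, 5), Sample(1, 0, 5),
            Sample(1, 1, 5), Sample(0, 1, 5), Sample(0, 0, 7)]
    bad = [Sample(0, 0, 0), Sample(1, 0, 5), Sample(1, 0, 6), Sample(0, 0, 6)]
    print(check_handshake(good))
    print(check_handshake(bad))
```

The well-behaved trace produces no messages; the second trace changes `data` mid-transfer and withdraws `req` before `ack`, so both rules fire. Checks like these catch functional synchronization errors that no structural synchronizer search can see.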

Synopsys has worked with Xilinx, Intel/Altera and Microsemi on tuning support in SpyGlass-CDC for these platforms. You can check out more details, and watch the webinar and a demo on the methodology, HERE.
