I read at least an hour of news every day to stay informed, and across the many stories I’ve read about autonomous vehicles, the same familiar company names keep dominating the thought leadership. What really caught my attention this month was an autonomous vehicle technology announcement from Mentor Graphics, now a Siemens Business. I knew that Mentor has served the automotive market for years with pieces of ADAS (Advanced Driver Assistance Systems) technology, such as:

- Real-time operating systems

- Embedded systems design

- Cabling and wiring harnesses

- IC design, verification, emulation

- Semiconductor IP

As an overview, consider the six levels of driving automation (Level 0 through Level 5) defined by SAE International:

Level 0 – My previous car, a 1988 Acura Legend, where I controlled steering, brakes, throttle, and power.

Level 1 – My present car, a 1998 Acura RL, where the car can take over a single function, holding a set speed with cruise control, while I handle everything else.

Level 2 – A system automates both steering and acceleration, so the driver can take hands and feet off the controls but must stay ready to take over.

Level 3 – A driver is in the car, but the vehicle makes the safety-critical decisions under certain conditions.

Level 4 – A fully autonomous vehicle able to complete an entire trip, although not all driving scenarios are covered.

Level 5 – A fully autonomous vehicle able to drive like a human in all scenarios, even on dirt roads.
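The level list reads like a lookup table, so here is a minimal Python sketch that encodes it. The enum member names are labels chosen for this sketch (loosely following SAE’s level names), and `driver_must_supervise` and `covers_all_scenarios` are hypothetical helpers that simply restate the descriptions above; they are not part of any vehicle or Mentor API.

```python
from enum import IntEnum


class DrivingLevel(IntEnum):
    """Levels of driving automation, 0 through 5 (labels are illustrative)."""
    NO_AUTOMATION = 0           # driver controls steering, brakes, throttle
    DRIVER_ASSISTANCE = 1       # car takes over one function, e.g. cruise control
    PARTIAL_AUTOMATION = 2      # car steers and controls speed; driver stays ready
    CONDITIONAL_AUTOMATION = 3  # vehicle makes safety-critical decisions in some conditions
    HIGH_AUTOMATION = 4         # whole trip without a driver, but not every scenario
    FULL_AUTOMATION = 5         # drives like a human in all scenarios


def driver_must_supervise(level: DrivingLevel) -> bool:
    """Hypothetical helper: up to Level 2 the human must watch the road at all times."""
    return level <= DrivingLevel.PARTIAL_AUTOMATION


def covers_all_scenarios(level: DrivingLevel) -> bool:
    """Hypothetical helper: only Level 5 is described as handling every scenario."""
    return level == DrivingLevel.FULL_AUTOMATION


for level in DrivingLevel:
    print(level.value, level.name, driver_must_supervise(level))
```

Using `IntEnum` keeps the levels ordered, so comparisons such as `level <= DrivingLevel.PARTIAL_AUTOMATION` read the same way the list does.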

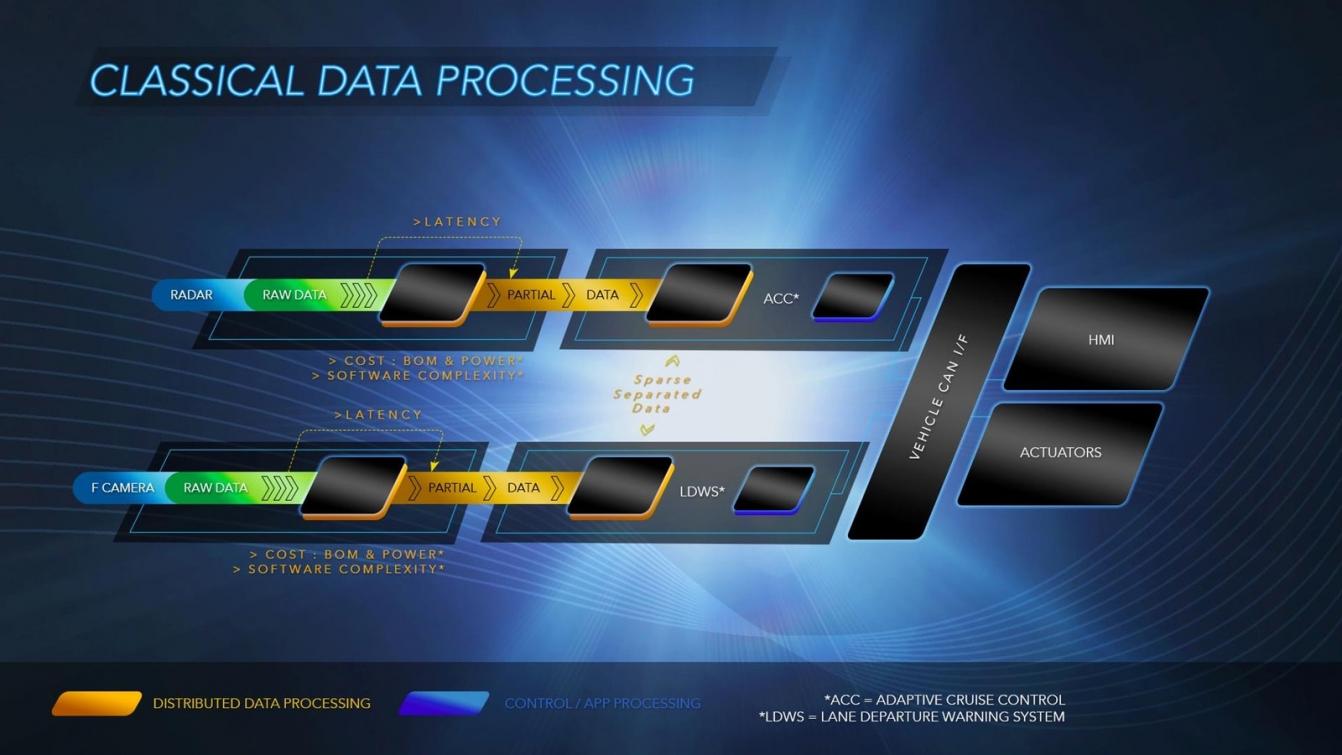

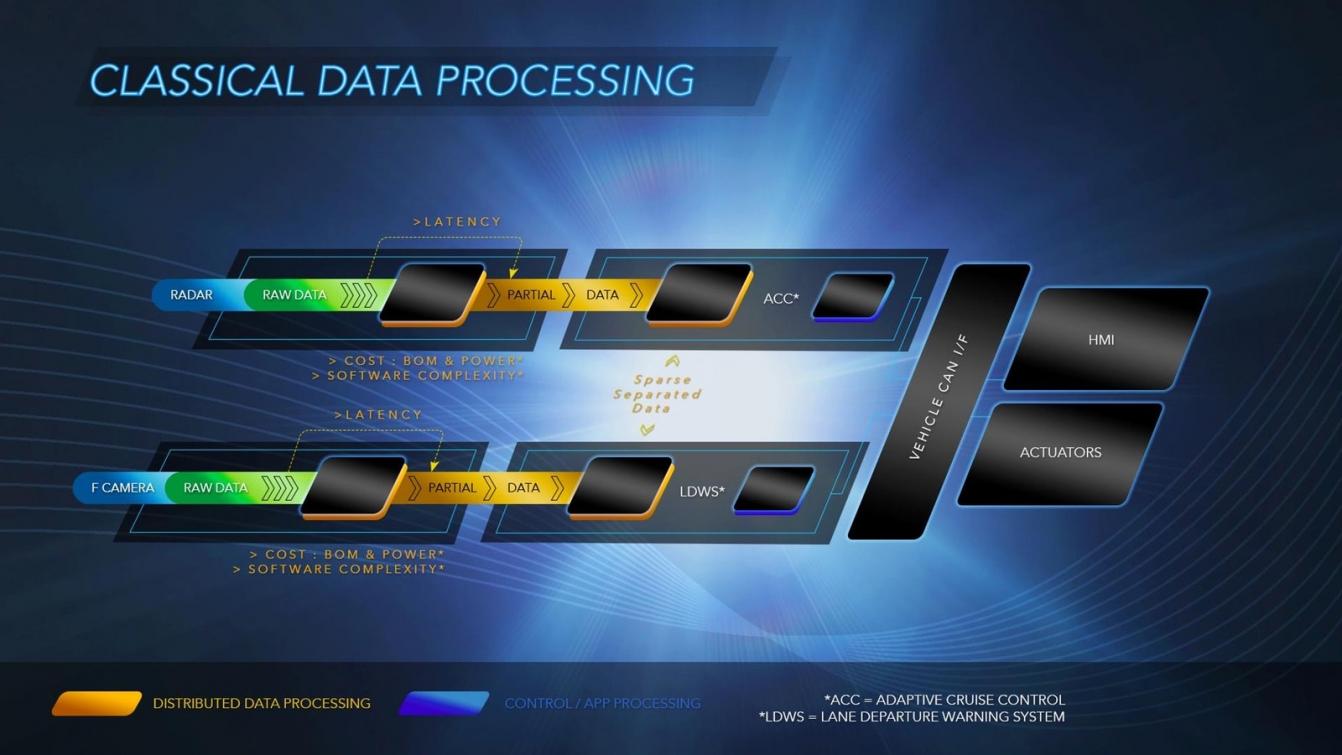

One approach used in autonomous vehicles today distributes sensors and data processing, as illustrated in the diagram above.

In the top chain of this approach, a radar sensor feeds data into a converter chip. An Adaptive Cruise Control (ACC) system uses that data and communicates over the vehicle’s CAN network, and the command finally reaches the actuators on your brakes to slow or stop the car. In the bottom chain, a separate front-facing camera produces raw data that gets converted and used by the Lane Departure Warning System (LDWS). So each automotive sensor has its own independent filtering, conversion, and processing, and the resulting data streams communicate over a bus to take some physical action. Some side effects of this distributed approach are:

- Longer system latency when transmitting safety-critical information

- Edge nodes don’t see all of the data, only snippets

- Increased cost and power requirements
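To make the first two side effects concrete, here is a toy Python model of the distributed layout described above. The stage names follow the radar-to-brake chain, but the per-hop latencies are invented placeholders, and `Stage`, `end_to_end_latency` and `blind_spots` are hypothetical helpers, not anything from Mentor.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float  # HYPOTHETICAL per-hop latency, chosen only for illustration


# Top chain: radar -> converter -> ACC -> CAN -> brake actuators.
ACC_CHAIN = [
    Stage("radar sensor", 5.0),
    Stage("converter chip", 2.0),
    Stage("ACC ECU", 10.0),
    Stage("CAN bus", 4.0),
    Stage("brake actuator", 3.0),
]

# Which raw sensor streams each processing node receives in the distributed layout.
DISTRIBUTED_INPUTS = {
    "ACC ECU": {"radar"},
    "LDWS ECU": {"front camera"},
}
ALL_SENSORS = {"radar", "front camera"}


def end_to_end_latency(chain: list[Stage]) -> float:
    """Every hop a safety-critical message crosses adds its own delay."""
    return sum(stage.latency_ms for stage in chain)


def blind_spots(inputs: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each node, the sensor streams it never sees (the 'only snippets' problem)."""
    return {node: ALL_SENSORS - seen for node, seen in inputs.items()}


print(end_to_end_latency(ACC_CHAIN))    # 24.0 with these made-up numbers
print(blind_spots(DISTRIBUTED_INPUTS))  # {'ACC ECU': {'front camera'}, 'LDWS ECU': {'radar'}}
```

The absolute numbers mean nothing; the point is structural. Latency is a sum over hops, and each processing node’s view is limited to the sensor wired to it.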


Mentor’s Approach

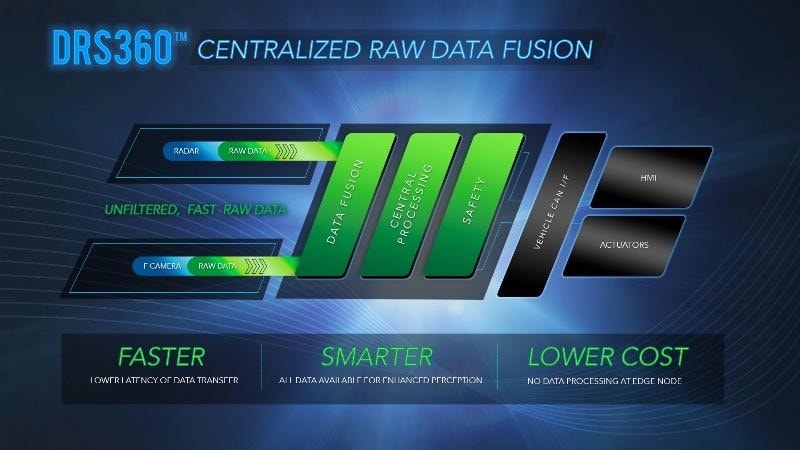

Taking a centralized approach, Mentor has created the DRS360 platform, designed for the rigors of Level 5 autonomy. Here’s how data flows in the DRS360 platform:

Raw sensor data travels over high-speed communication lines to a centralized module, so all processing occurs in one place. The DRS part of DRS360 stands for Direct, Raw Sensing. This low-latency communication architecture makes all of the sensor data, both raw and processed, visible and usable throughout the entire system at all times. One benefit of DRS360 is that decisions can be made more quickly and efficiently than with the distributed approach. Power consumption is expected to be around 100 watts, through the use of neural networks in DRS360.
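For contrast with the distributed chains, the "everything visible everywhere" property of a centralized design can be sketched as a single hub that holds every raw frame and every processed result. This is a hypothetical Python model: `CentralModule`, `ingest`, `publish` and `view` are names invented for illustration and do not describe DRS360’s actual software interfaces.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CentralModule:
    """Hypothetical central hub: every raw frame and every processed result lands here."""
    raw: dict[str, list[bytes]] = field(default_factory=lambda: defaultdict(list))
    processed: dict[str, object] = field(default_factory=dict)

    def ingest(self, sensor: str, frame: bytes) -> None:
        # Raw data arrives directly, with no per-sensor converter or ECU in between.
        self.raw[sensor].append(frame)

    def publish(self, key: str, result: object) -> None:
        # Processed results are stored alongside the raw data they came from.
        self.processed[key] = result

    def view(self) -> tuple[set[str], set[str]]:
        # Any function running on the module sees all sensors and all results.
        return set(self.raw), set(self.processed)


hub = CentralModule()
hub.ingest("radar", b"\x01\x02")
hub.ingest("front camera", b"\xff")
hub.publish("lane_position", 0.3)
sensors, results = hub.view()
```

Unlike the distributed model, no consumer here has a blind spot: the ACC logic and the lane-departure logic would both read from the same `hub`.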


Inside DRS360

Now that we have the big picture of this new platform from Mentor, what exactly is inside of it?

- Xilinx Zynq UltraScale+ MPSoC FPGAs

- Neural network algorithms for machine learning

- Integration services

- Mentor IP

With DRS360, you won’t use a separate chip to connect with each automotive sensor; instead, the sensors connect to an FPGA. Your ADAS system can then use either x86 or ARM-based SoCs to perform common functions: sensor fusion, event detection, object detection, situational awareness, path learning, and actuator control.
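Sensor fusion is the first function on that list. A common textbook illustration, and not Mentor’s algorithm, is inverse-variance weighting: combine independent estimates of the same quantity, trusting the less noisy sensor more. The sketch below assumes radar and camera each report a range to the car ahead; the noise variances are made-up values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    value: float     # e.g. distance to the car ahead, in meters
    variance: float  # sensor noise variance, in m^2 (must be > 0)


def fuse(measurements: list[Measurement]) -> Measurement:
    """Inverse-variance weighted average of independent measurements of one quantity."""
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(m.variance <= 0 for m in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused estimate is less uncertain than any single input.
    return Measurement(value=value, variance=1.0 / total)


radar = Measurement(value=40.0, variance=0.25)  # hypothetical: radar ranges precisely
camera = Measurement(value=42.0, variance=1.0)  # hypothetical: camera range is noisier
fused = fuse([radar, camera])
print(fused)  # Measurement(value=40.4, variance=0.2)
```

The fused range lands closer to the radar reading because its variance is four times smaller, and the fused variance (0.2) is below both inputs. This is also the static case of a Kalman filter update, which is why centralizing all raw sensor data makes this kind of fusion straightforward.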

Next Steps

You can read more about DRS360 online, browse the press release, or watch an overview video. I’m looking forward to seeing how quickly the DRS360 platform is adopted over the coming year, because the autonomous vehicle market is so active and could become the next big driver of the semiconductor industry. Let’s see how long it takes before a vehicle carries some form of a Mentor Inside logo.
