High-level synthesis (HLS) involves the generation of an RTL hardware model from a C/C++/SystemC description. The C code is typically referred to as a behavioral or algorithmic model. The C language constructs and semantics available to architects enable efficient and concise coding: the code itself is smaller and easier to write and read, and, significantly, it compiles and simulates much faster than a register-transfer model.
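For readers new to the style, here is roughly what a small untimed algorithmic model can look like: a hypothetical 4-tap FIR filter in plain C++. It is an illustrative sketch, not taken from any Catapult example; the tap count and function name are assumptions.

```cpp
#include <array>
#include <cassert>

// Illustrative 4-tap FIR filter written as an untimed, algorithmic model.
// There is no clock, reset, or sensitivity list: each call consumes one input
// sample and returns one output. Tap count and coefficients are hypothetical.
constexpr int kTaps = 4;

int fir(int sample, std::array<int, kTaps>& delay_line,
        const std::array<int, kTaps>& coeffs) {
  // Shift the delay line; the oldest sample drops off the end.
  for (int i = kTaps - 1; i > 0; --i) {
    delay_line[i] = delay_line[i - 1];
  }
  delay_line[0] = sample;

  // Multiply-accumulate across all taps.
  int acc = 0;
  for (int i = 0; i < kTaps; ++i) {
    acc += delay_line[i] * coeffs[i];
  }
  return acc;
}
```

Driving it with four samples of 1 and coefficients {1, 2, 3, 4} yields 1, 3, 6, 10 as the delay line fills; deciding how many multipliers to build, and in which cycles, is left entirely to HLS.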

HLS has been an active area of academic and EDA industry research for some time, starting not long after RTL-to-gate synthesis emerged in the late 1980s. Yet the adoption of HLS in industry has been slow, due in part to:

(1) The learning curve is not insignificant.

Designers familiar with the RTL languages VHDL and/or Verilog are used to working with hardware data-typed signals; evaluation occurs when a concurrent statement (a VHDL process or a Verilog always block) is triggered by a change in a signal in its sensitivity list, often a synchronizing clock.

Conversely, C models are typically untimed, with variables that have non-hardware specific types, such as “int” or “short”.
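To make the type mismatch concrete, the sketch below compares C's `int` arithmetic with a fixed-width hardware register. `UIntN` is a hypothetical helper invented for this example; production HLS flows use vendor bit-accurate type libraries instead (Catapult uses the Algorithmic C `ac_int`/`ac_fixed` types), and nothing here mirrors their API.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for a bit-accurate unsigned type of width W.
// It stores the value in a 64-bit container and masks every result to
// W bits, mimicking how a W-bit hardware register wraps around.
template <int W>
struct UIntN {
  static_assert(W > 0 && W < 64, "width must fit in a 64-bit container");
  static constexpr std::uint64_t kMask = (std::uint64_t{1} << W) - 1;
  std::uint64_t v;

  constexpr explicit UIntN(std::uint64_t x = 0) : v(x & kMask) {}
  constexpr UIntN operator+(UIntN o) const { return UIntN(v + o.v); }
  constexpr UIntN operator*(UIntN o) const { return UIntN(v * o.v); }
};

// The same addition evaluated with a C "int" and with an 8-bit type:
// int keeps the full result; the 8-bit register wraps to 300 - 256 = 44.
constexpr int kAsInt = 200 + 100;
constexpr UIntN<8> kAsU8 = UIntN<8>(200) + UIntN<8>(100);
static_assert(kAsInt == 300, "int does not wrap here");
static_assert(kAsU8.v == 44, "8-bit result wraps modulo 256");
```

An `int` model silently assumes 32-bit (or wider) datapaths; choosing and checking the real widths is exactly the kind of hardware thinking that RTL designers expect to see explicitly.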

(2) The generated RTL is cumbersome to read and difficult to correlate to the original C source.

(3) The equivalence between C and synthesized RTL models was difficult to prove “formally”.

RTL synthesis adoption accelerated in tandem with the use of (combinational) logic cone equivalency checking (LEC) tools, which rely on a correspondence mapping between RTL and gate-level state points; the “cycle-accurate” RTL coding style typically allows no additional inferred sequential state.

Conversely, HLS tools allocate state registers and assign expressions to specific clock cycles, guided by user constraints. Because the generated RTL therefore contains sequential state with no direct counterpart in the C model, comparing the two requires a richer sequential logic equivalence check (SLEC).
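A toy illustration of why combinational LEC is not enough: below, an untimed multiply-add is compared with a hand-written, two-stage pipelined model of the kind HLS might generate. Both models and the trace-based check are hypothetical. A sequential equivalence tool proves the relationship formally for all input sequences; this sketch only simulates one trace after aligning outputs by an assumed pipeline latency.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Untimed reference model: one call produces one result.
int mac_ref(int a, int b, int c) { return a * b + c; }

// Hypothetical cycle-based model of the same function: the multiply and the
// add are scheduled in different cycles, creating pipeline registers that
// have no counterpart in the C model.
struct MacPipelined {
  int prod_r = 0, c_r = 0;  // stage-1 registers
  int out_r = 0;            // stage-2 (output) register

  // One clock edge: returns the output register value seen before the edge.
  int tick(int a, int b, int c) {
    int out = out_r;
    out_r = prod_r + c_r;  // stage 2 consumes last cycle's stage-1 values
    prod_r = a * b;        // stage 1
    c_r = c;
    return out;
  }
};

using Inputs = std::array<int, 3>;

// Checks that the pipelined model reproduces the reference results, in
// order, delayed by `latency` cycles, on one finite input trace.
bool equivalent_on_trace(const std::vector<Inputs>& trace, std::size_t latency) {
  MacPipelined dut;
  for (std::size_t k = 0; k < trace.size() + latency; ++k) {
    // Drain the pipeline with zero inputs once the trace is exhausted.
    const Inputs in = k < trace.size() ? trace[k] : Inputs{0, 0, 0};
    const int out = dut.tick(in[0], in[1], in[2]);
    if (k >= latency) {
      const Inputs& x = trace[k - latency];
      if (out != mac_ref(x[0], x[1], x[2])) return false;
    }
  }
  return true;
}
```

With this two-stage model the check passes for a latency of 2 and fails for a latency of 1; no single register in the pipelined model corresponds cycle-for-cycle to the C function's return value, which is why a purely combinational mapping cannot establish equivalence.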

And perhaps the most significant deterrent to HLS adoption was:

(4) Initial users of HLS tools often attempted to apply the technology to functionality that was perhaps ill-suited to a C-language algorithm and HLS hardware definition.

I recently had the opportunity to get an update on HLS usage and methodology from Ellie Burns, Senior Product Marketing Manager, and Mike Fingeroff, HLS Technologist at Mentor Graphics. Mentor’s acquisition of Calypto Design Systems added Catapult HLS and SLEC technology to the product portfolio.

Ellie highlighted several recent examples of HLS adoption:

– NVIDIA’s Tegra X1 multimedia processor used a combination of C and RTL, realizing significant coding and verification productivity gains through judicious C modeling.

– Qualcomm has used HLS for implementation of codecs, and easily integrated the high-level models into their verification flows.

– Google used HLS for the VP9 codec: 69 KLOC of high-level code replaced what had been estimated at 300K+ lines of RTL, with a major verification speedup.

In addition, Ellie highlighted “deep learning” and wireless/broadband communications protocols as application drivers for HLS adoption. Customers have clearly identified classes of design functionality that are well suited to HLS, where they can leverage the coding and verification productivity gains.

Ellie added, “Architects are finding they can more easily explore different micro-architectures with high-level coding. HLS also enables designers to evaluate different technology targets more quickly – by changing PPA constraints, different implementations can be compared for overall area and power estimates, and overall algorithm latency and throughput.”

Mike provided a broad introduction to HLS and to the recommended Mentor/Calypto (v10.0) methodology.

(1) Property Checking

C models should be checked for potential HLS issues, in a manner similar to RTL linting – examples include “uninitialized reads”, “read/write bounds errors”, and “incomplete switch statements”, all issues that need to be addressed in the high-level model.
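The three issue classes named above can be illustrated in plain C++. Each function below shows the corrected form, with the pattern a checker would flag described in a comment; the function names are hypothetical, and no particular tool's diagnostics are reproduced.

```cpp
#include <array>
#include <cassert>

enum class Mode { kAdd, kSub, kPass };

// 1) Uninitialized read. Writing `int acc;` and then `acc += x[i];` reads an
//    indeterminate value, so C simulation and the generated RTL can disagree.
int sum4(const std::array<int, 4>& x) {
  int acc = 0;  // fixed: explicit initial value
  for (int i = 0; i < 4; ++i) acc += x[i];
  return acc;
}

// 2) Read/write bounds error. A loop bound written as `i <= 4` would access
//    x[4], one past the end of a 4-entry array (a 4-word memory in hardware).
int max4(const std::array<int, 4>& x) {
  int m = x[0];
  for (int i = 1; i < 4; ++i) {  // fixed: highest legal index is 3
    if (x[i] > m) m = x[i];
  }
  return m;
}

// 3) Incomplete switch. If a Mode value had no case and `y` had no initial
//    value, `y` would be read uninitialized for that mode.
int apply(Mode m, int a, int b) {
  int y = 0;  // defined result even for an unlisted mode
  switch (m) {
    case Mode::kAdd:  y = a + b; break;
    case Mode::kSub:  y = a - b; break;
    case Mode::kPass: y = a;     break;  // fixed: every enumerator handled
  }
  return y;
}
```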

(2) Verification Coverage

Just as RTL users seek to measure testbench coverage at the statement, line, branch, and (focused) expression levels, C models also use simulation coverage measures. This data needs to be collected in a coverage database, such as Mentor’s UCDB, so that additional RTL coverage top-off data can be merged with it (more on that shortly).

Catapult verification integrates with common C coverage tools, such as Squish Coco, Bullseye, and gcov. Also, note that Catapult synthesizes C assertions to RTL.

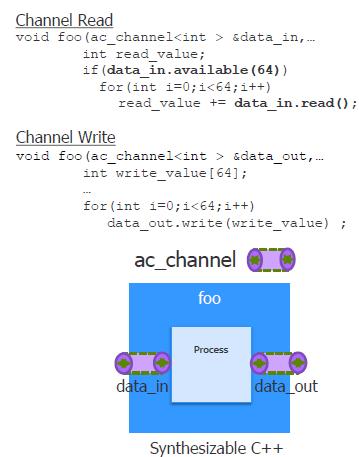

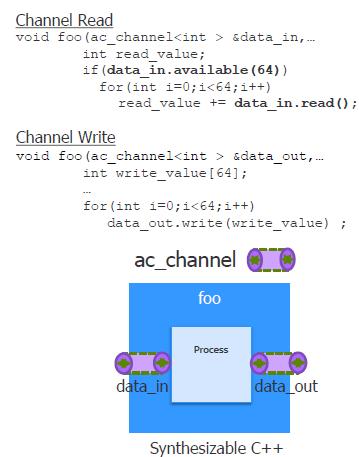
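As a small example of an assertion in a C model, consider the hypothetical saturating adder below. The `assert` states an output-range property that is checked on every call in C simulation; per Mike, Catapult can also carry such C assertions into the RTL. The two saturation branches are the kind of targets that branch coverage is meant to expose.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical 16-bit saturating adder for a C model.
std::int16_t sat_add16(std::int16_t a, std::int16_t b) {
  int sum = int{a} + int{b};  // widen so the true sum is exact
  if (sum > INT16_MAX) {
    sum = INT16_MAX;          // coverage target: positive overflow
  } else if (sum < INT16_MIN) {
    sum = INT16_MIN;          // coverage target: negative overflow
  }
  // Property: the result always fits in 16 bits.
  assert(sum >= INT16_MIN && sum <= INT16_MAX);
  return static_cast<std::int16_t>(sum);
}
```

Building a testbench for this with `g++ --coverage`, running it, and then running `gcov` (with `-b` for branch data) on the source reports how often each line and branch executed; a testbench that never overflows leaves both saturation branches unexecuted.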

(3) Explicit interface models are added.

The Catapult methodology provides a means to define an interface model for sequential streaming data in an otherwise untimed description, as illustrated below (using class templates and req/ack handshaking protocols).
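A minimal sketch of the idea, assuming a hypothetical `Stream<T>` class template; Catapult's own streaming class (`ac_channel`, from the Algorithmic C library) is not reproduced here. In the untimed model the channel is only an ordered queue; it is the HLS tool that turns each write and read into handshake signaling on an RTL port.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical streaming channel template. In untimed C it is an ordered
// queue; in hardware, a write would present data with a request and a read
// would acknowledge it.
template <typename T>
class Stream {
 public:
  void write(const T& v) { q_.push_back(v); }
  T read() {
    assert(!q_.empty() && "read from empty stream");
    T v = q_.front();
    q_.pop_front();
    return v;
  }
  std::size_t available() const { return q_.size(); }

 private:
  std::deque<T> q_;
};

// Block with streaming ports: consumes pairs of samples and emits their sum,
// processing only complete pairs that are already present.
void pair_sum(Stream<int>& in, Stream<int>& out) {
  while (in.available() >= 2) {
    const int a = in.read();
    const int b = in.read();
    out.write(a + b);
  }
}
```

Keeping the data ordering explicit in the C model, rather than passing whole arrays, is what lets a tool derive a streaming, handshaked interface instead of a memory-mapped one.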

(4) User-defined constraints allow PPA exploration (where Performance refers to algorithmic latency/throughput, not cycle-specific timing slack).

The heart of HLS is the translation of algorithmic looping and branching constructs in C code to a cycle-based hardware description.

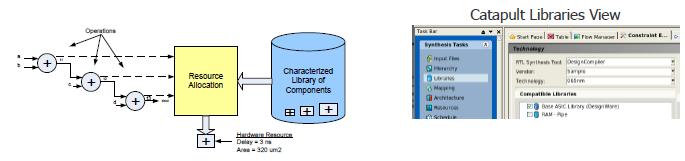

A dataflow graph (DFG) is generated from the C model, computational resources are defined, and sequential operations are scheduled. User-provided constraints guide the assignment of operations to RTL cycles, and guide the allocation of a variable type to a specific (potentially, non-standard) hardware bit width.
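The scheduling step can be illustrated with a deliberately simplified resource-constrained list scheduler. This is a textbook technique, not Catapult's algorithm; it assumes every operation takes exactly one cycle, no operation chaining, nodes listed in topological order, and per-cycle limits on multipliers and adders as the only constraints.

```cpp
#include <cassert>
#include <vector>

enum class Op { kMul, kAdd };

struct Node {
  Op op;
  std::vector<int> preds;  // indices of nodes whose results this node uses
};

// Returns the (0-based) cycle assigned to each DFG node.
std::vector<int> list_schedule(const std::vector<Node>& dfg, int max_mul,
                               int max_add) {
  assert(max_mul > 0 && max_add > 0);  // otherwise scheduling cannot finish
  const int n = static_cast<int>(dfg.size());
  std::vector<int> cycle(n, -1);
  int scheduled = 0;
  for (int c = 0; scheduled < n; ++c) {
    int used_mul = 0, used_add = 0;
    for (int i = 0; i < n; ++i) {
      if (cycle[i] != -1) continue;
      // Ready only if every predecessor finished in an earlier cycle.
      bool ready = true;
      for (int p : dfg[i].preds) {
        if (cycle[p] == -1 || cycle[p] >= c) ready = false;
      }
      const bool is_mul = dfg[i].op == Op::kMul;
      int& used = is_mul ? used_mul : used_add;
      const int limit = is_mul ? max_mul : max_add;
      if (ready && used < limit) {
        cycle[i] = c;
        ++used;
        ++scheduled;
      }
    }
  }
  return cycle;
}
```

For y = a*b + c*d + e*f (three multiplies feeding two adds), one multiplier and one adder give a 4-cycle schedule, while allowing three multipliers shortens it to 3 cycles at the cost of more multiplier area: the latency/area trade-off that user constraints steer.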

Design exploration involves evaluating different loop unrolling and loop pipelining options. A Gantt-chart style visualization in Catapult shows how the DFG operations are assigned to specific cycles.
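A back-of-envelope model makes the unrolling and pipelining trade-off concrete. It assumes each iteration needs `depth` cycles, that enough hardware exists for the chosen option, and that unrolled iterations run fully in parallel; real tools also account for memory ports, loop-carried dependencies, and resource sharing, which this sketch ignores.

```cpp
#include <cassert>

// Cycles for n iterations executed one after another (no overlap).
long rolled_cycles(long n, long depth) { return n * depth; }

// Pipelined loop: a new iteration starts every `ii` cycles (the initiation
// interval), so only the first iteration pays the full depth.
long pipelined_cycles(long n, long depth, long ii) {
  return n == 0 ? 0 : depth + (n - 1) * ii;
}

// Unrolled by factor u: u iterations run side by side in each round.
long unrolled_cycles(long n, long depth, long u) {
  assert(u > 0);
  return ((n + u - 1) / u) * depth;  // ceil(n / u) rounds
}
```

For 64 iterations of a 4-cycle body, this gives 256 cycles rolled, 4 + 63 = 67 cycles pipelined at II = 1, and 16 × 4 = 64 cycles unrolled by 4; the unrolled version needs roughly four copies of the datapath, while the pipelined one reuses a single copy.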

A technology library is used to guide HLS cycle creation and scheduling. This library differs from a traditional synthesis library, as its elements must include not just “cells” but also operations; for example, HLS needs PPA estimates for multiplication and addition operators, as illustrated below. The library also needs to include models for the available memory array IP, including their read/write/enable signaling.
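The kind of information such a library carries, and one way a scheduler might use it, can be sketched as follows. Every entry (operator names, delays, areas) is a made-up placeholder rather than data from any real library, and the greedy chaining rule is a simplification.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical operator characterization: delay (ns) and area (arbitrary
// units) for each operator/width combination. Placeholder numbers only.
struct OpCost {
  double delay_ns;
  double area;
};

const std::map<std::string, OpCost> kLib = {
    {"add16", {0.6, 120.0}},
    {"mul16", {2.1, 1900.0}},
    {"mux2_16", {0.2, 40.0}},
};

// Greedy estimate of how many clock cycles a chain of dependent operations
// needs: ops are chained within a cycle until the next one would exceed the
// clock period, at which point a register is inserted.
int cycles_for_chain(const std::vector<std::string>& chain, double clock_ns) {
  if (chain.empty()) return 0;
  int cycles = 1;
  double used = 0.0;
  for (const std::string& name : chain) {
    const double d = kLib.at(name).delay_ns;
    assert(d <= clock_ns && "multi-cycle operators are not modeled here");
    if (used + d > clock_ns) {
      ++cycles;
      used = 0.0;
    }
    used += d;
  }
  return cycles;
}

// Area estimate for a set of allocated operator instances.
double total_area(const std::vector<std::string>& ops) {
  double a = 0.0;
  for (const std::string& name : ops) a += kLib.at(name).area;
  return a;
}
```

With these placeholder numbers, a 16-bit multiply followed by an add fits in one cycle at a 3.0 ns clock but needs two cycles at 2.5 ns, so the accuracy of the library estimates directly shapes the resulting schedule.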

(5) RTL verification (and correspondence to the original model) is key.

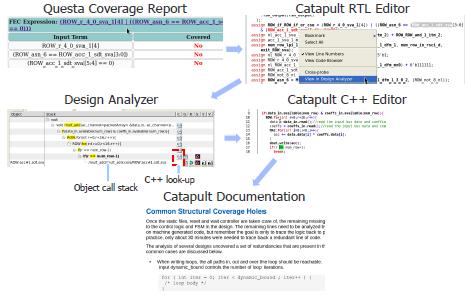

Catapult’s verification flow builds an RTL-based testbench from the original C testbench, so that additional coverage data can be measured and merged.

Even with very high simulation coverage on the original model, Mike highlighted that the RTL model may introduce the need for additional evaluation and coverage hole examination. “A common case is the need to examine stall conditions in the RTL, which may not be part of the original verification suite. Passing dynamic bounds as a loop parameter may result in cases where the RTL hardware may support an unsupported value that needs to be examined – for example, the hardware will support a loop value of ‘0’ that may be unexpected/uncovered in the original model.”
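The loop-bound case Mike describes can be sketched as follows. The function and the `kMaxN` bound are hypothetical; the structure (a constant maximum trip count with a data-dependent early exit) is a commonly used HLS coding style for dynamic loop bounds.

```cpp
#include <cassert>

constexpr int kMaxN = 16;  // hypothetical hardware maximum trip count

// Sums the first n elements. n is a runtime input, so the generated RTL
// accepts any value its port can carry, including 0 and values above kMaxN.
int sum_first_n(const int (&x)[kMaxN], int n) {
  int acc = 0;
  for (int i = 0; i < kMaxN; ++i) {  // constant bound for the hardware loop
    if (i >= n) break;               // data-dependent early exit
    acc += x[i];
  }
  return acc;
}
```

If the original C testbench only ever drives 1 ≤ n ≤ 16, the n = 0 path (immediate exit) and the n > 16 path (clamped at `kMaxN`) are never exercised in C, yet both are reachable in the RTL; directed tests such as `sum_first_n(x, 0)` are needed to cover them.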

(6) The correlation between C source and HLS RTL is extremely high.

The Catapult Design Analyzer platform shows how RTL statements correlate to the source, as illustrated below.

This is especially important for updating the C source after any subsequent RTL ECOs.

Mike challenged my thinking though, saying, “Catapult HLS users rarely need to implement RTL ECOs, then close the loop back to the high-level model. Functional verification and PPA optimization start with the C model – there’s typically no need to work at the RTL level.” (The accuracy of the HLS library model estimates for macro-functions is crucial, I’ve concluded.)

HLS has indeed grown in adoption for the implementation of complex algorithms, where both coding and simulation throughput can be improved substantially with a C/C++/SystemC model. PPA optimization guidance has improved, with easy exploration of execution pipelining and resource allocation. Equivalence between the C model and the generated RTL can be formally demonstrated.

In short, the HLS learning curve barrier has been reduced – both with the use of established coding styles (plus linting), and with robust C and RTL verification flows.

If you are implementing an architecture and/or algorithms for which high-level modeling is appropriate (and quickly evaluating different technology targets is crucial), then HLS may be an ideal solution.

For more information on Mentor’s Catapult methodology, please follow this link (lots of whitepapers available, too).

-chipguy
